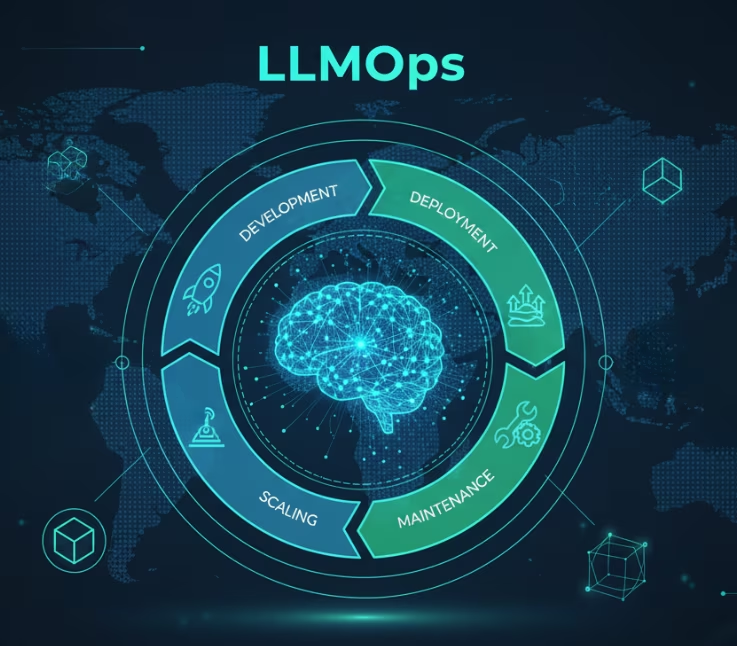

Large Language Model Operations (LLMOps) refers to the practices and tools used to manage the lifecycle of large language models (LLMs). As LLMs such as the GPT models behind OpenAI’s ChatGPT become increasingly integral to AI-powered products, LLMOps helps ensure these models are created, deployed, and maintained efficiently, securely, and at scale.

LLMs are changing how AI products are built. Unlike traditional machine learning models, they require specialized operations due to their complexity and versatility. LLMOps addresses the unique challenges of optimizing, securing, and scaling LLMs, making it a critical framework for enterprises and AI teams.

This article will explain what LLMOps is, how it differs from MLOps, and the role it plays in the development and success of AI-powered applications.

What is LLMOps?

Large Language Model Operations (LLMOps) is a methodology for managing, deploying, monitoring, and maintaining large language models (LLMs) throughout their lifecycle, from development to real-world application. It focuses on addressing the unique challenges of LLMs, such as OpenAI’s GPT series, Google’s Gemini, and Anthropic’s Claude, by refining the machine learning operations (MLOps) framework, which itself is an extension of DevOps.

LLMOps has gained significant attention since 2023, as businesses began increasingly exploring generative AI deployments. It plays a crucial role in ensuring that LLMs operate reliably, efficiently, and at scale when integrated into real-world applications.

The main goal of LLMOps is to ensure the smooth operation of LLMs, improving model performance, scalability, and security. The framework offers several key benefits:

- Flexibility: LLMOps enables models to handle various workloads and seamlessly integrate with different applications, making LLM deployments more scalable and adaptable.

- Automation: Like MLOps and DevOps, LLMOps heavily relies on automated workflows and continuous integration/continuous delivery (CI/CD) pipelines. This reduces manual intervention and accelerates development cycles.

- Collaboration: Adopting LLMOps standardizes tools and practices across teams such as data scientists, AI engineers, and software developers. This ensures that best practices and knowledge are shared across the organization.

- Performance: LLMOps integrates continuous retraining and user feedback loops to maintain and improve model performance over time.

- Security and Ethics: The cyclical nature of LLMOps ensures regular security tests and ethical reviews, protecting against cybersecurity threats and promoting responsible AI practices.

LLMOps is critical in building and maintaining high-performance, scalable, and secure AI systems powered by large language models.

How Does LLMOps Work?

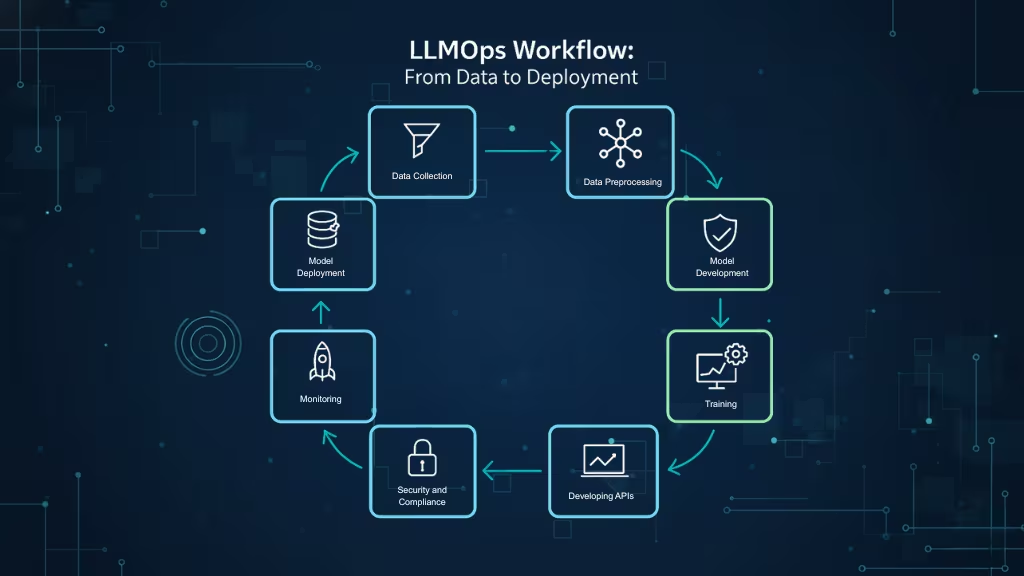

LLMOps is a comprehensive approach for managing the entire lifecycle of large language models (LLMs), from data preparation to deployment and continuous optimization. It integrates best practices at every stage, ensuring that LLMs are efficient, secure, and scalable. Here’s a breakdown of how LLMOps works:

1. Data Collection and Preparation

The first step in LLMOps is data collection and preparation. LLMs require vast amounts of high-quality data to be trained effectively. This data must be sourced, cleaned, and formatted for training. During this stage, LLMOps practices ensure the data is tokenized, normalized, and screened for bias, setting a solid foundation for effective model training. This includes exploratory data analysis (EDA), with visualizations and summary tables, to gain a deep understanding of the data’s characteristics.
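As a rough illustration of the normalization and tokenization mentioned above, the following sketch uses only the Python standard library. The function names `normalize_text` and `simple_tokenize` are hypothetical, and production pipelines typically rely on a trained subword tokenizer rather than a regular expression.

```python
import re
import unicodedata
from typing import List


def normalize_text(text: str) -> str:
    """Apply Unicode NFKC normalization, lowercase, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    text = text.lower()
    return re.sub(r"\s+", " ", text).strip()


def simple_tokenize(text: str) -> List[str]:
    """Split normalized text into word and punctuation tokens (illustrative only)."""
    return re.findall(r"\w+|[^\w\s]", normalize_text(text))


# Example usage on a small, made-up string.
print(simple_tokenize("  LLMOps   keeps\tdata CLEAN! "))
```

Keeping normalization separate from tokenization makes it easier to reuse the same cleaning step for training data and for incoming requests.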

2. Data Preprocessing and Prompt Engineering

Next comes data preprocessing and prompt engineering. Once the data is prepared, it needs to be structured for training. This step often involves labeling, annotating, and organizing the data to ensure it is ready for the model. Prompt engineering is also critical, as it defines how the model will generate responses based on input. Writing effective prompts is crucial because it directly influences the quality of the model’s output, ensuring it produces meaningful and relevant responses.
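One way to keep prompts consistent and reviewable is to store them as templates rather than ad hoc strings. The sketch below assumes a summarization use case; the template wording, the `build_prompt` helper, and its parameters are all hypothetical.

```python
from string import Template

# Hypothetical template; the wording and field names are assumptions.
SUMMARY_PROMPT = Template(
    "You are a $role.\n"
    "Summarize the text below in at most $max_sentences sentences.\n"
    "Text:\n$document\n"
    "Summary:"
)


def build_prompt(document: str, role: str = "helpful assistant",
                 max_sentences: int = 3) -> str:
    """Fill every placeholder in the template and return the final prompt."""
    if not document.strip():
        raise ValueError("document must not be empty")
    return SUMMARY_PROMPT.substitute(
        role=role, max_sentences=max_sentences, document=document.strip()
    )


# Example usage with a made-up document.
print(build_prompt("LLMOps manages the lifecycle of large language models."))
```

Because the template lives in one place, it can be version-controlled and tested like any other artifact in the pipeline.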

3. Model Development

Once the data is ready, the model enters the development phase. LLMs are typically pre-trained with self-supervised learning on large text corpora, then adapted with supervised fine-tuning and sometimes reinforcement learning (for example, reinforcement learning from human feedback). During this stage, LLMOps ensures the model is trained with the appropriate methods, enabling it to learn language patterns and to be fine-tuned for specific tasks like text summarization or question answering.

4. Training and Fine-Tuning

In the training phase, machine learning algorithms help the model learn the patterns in the data. Once the model is trained, fine-tuning optimizes its performance. This involves adjusting hyperparameters, tracking errors, reliability, and bias, and evaluating how well the model performs on different tasks. Teams commonly use tools like TensorFlow and Hugging Face Transformers for fine-tuning so that the model specializes in specific domains or tasks.

5. Model Governance

As the model evolves, model governance becomes important. This involves version control, ensuring collaboration among teams, and tracking the model’s safety, reliability, and security. Regular audits for bias and weaknesses are conducted to ensure the model remains both effective and ethical.
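The version-control aspect of governance can be sketched as a small in-memory registry that assigns version numbers and stores a checksum for each model artifact. The `ModelRegistry` and `ModelVersion` classes are hypothetical; real teams usually rely on a dedicated model registry service.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    artifact_sha256: str
    notes: str


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry, for illustration only."""
    _versions: Dict[str, List[ModelVersion]] = field(default_factory=dict)

    def register(self, name: str, artifact: bytes, notes: str = "") -> ModelVersion:
        """Store a new version with a checksum of the artifact bytes."""
        history = self._versions.setdefault(name, [])
        digest = hashlib.sha256(artifact).hexdigest()
        entry = ModelVersion(name, len(history) + 1, digest, notes)
        history.append(entry)
        return entry

    def latest(self, name: str) -> ModelVersion:
        return self._versions[name][-1]

    def verify(self, name: str, version: int, artifact: bytes) -> bool:
        """Check that an artifact matches the checksum recorded for a version."""
        entry = self._versions[name][version - 1]
        return entry.artifact_sha256 == hashlib.sha256(artifact).hexdigest()
```

Recording a checksum alongside each version gives auditors a simple way to confirm that the model in production is the one that was reviewed.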

6. Model Deployment

Once the model is trained and fine-tuned, it moves to the deployment phase. LLMOps ensures that the model is deployed to a production environment, where it will interact with real-world applications. This involves setting up the necessary infrastructure on cloud platforms or on-premise systems, ensuring smooth integration into business applications. LLMOps also ensures the model is easily updated through CI/CD pipelines, which support fast and efficient deployment of new model versions and improvements.

7. Monitoring and Optimization

After deployment, model management and monitoring become critical. LLMOps ensures continuous monitoring of the model’s performance, tracking metrics like accuracy, response times, and user feedback. Retraining is done periodically to improve the model based on new data, ensuring that it remains accurate and relevant over time. Continuous feedback loops enable real-time optimization, helping the model evolve with changing data and user needs.
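Response-time monitoring is often expressed as a percentile check against a latency budget. The following sketch computes a nearest-rank percentile and raises an alert flag when the 95th percentile exceeds a threshold; the function names and the budget value in the usage line are assumptions.

```python
import math
from typing import List


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile; pct is expected to be in (0, 100]."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def latency_alert(latencies_ms: List[float], p95_budget_ms: float) -> bool:
    """Return True when the 95th-percentile latency exceeds the budget."""
    return percentile(latencies_ms, 95) > p95_budget_ms


# Example usage with made-up latency samples in milliseconds.
samples = [120.0, 135.0, 150.0, 110.0, 900.0]
print(latency_alert(samples, p95_budget_ms=500.0))
```

Percentiles are usually preferred over averages here because a handful of very slow responses can be hidden by a healthy mean.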

8. Developing APIs

To integrate the LLM with other applications, LLMOps supports the development of APIs and API gateways. The API allows the model to communicate with other software, and the API gateway helps manage multiple requests, offering tools for authentication and load distribution. LLMOps ensures that API performance is continuously monitored, ensuring smooth integration and optimal user experience.
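The two gateway responsibilities named above, authentication and load distribution, can be sketched in a few lines. The `Gateway` class, the API keys, and the backend names are hypothetical; a production gateway would also handle rate limiting, timeouts, and constant-time key comparison.

```python
import itertools
from typing import Iterable


class Gateway:
    """Hypothetical gateway: checks an API key, then picks a backend round-robin."""

    def __init__(self, api_keys: Iterable[str], backends: Iterable[str]) -> None:
        self._api_keys = set(api_keys)
        backend_list = list(backends)
        if not backend_list:
            raise ValueError("at least one backend is required")
        self._backends = itertools.cycle(backend_list)

    def route(self, api_key: str) -> str:
        """Return the backend that should serve this request."""
        if api_key not in self._api_keys:
            raise PermissionError("unknown API key")
        return next(self._backends)


# Example usage with made-up keys and backend names.
gateway = Gateway(api_keys=["demo-key"], backends=["llm-a", "llm-b"])
print([gateway.route("demo-key") for _ in range(3)])
```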

9. Security and Compliance

Security and compliance are ongoing concerns throughout the LLMOps lifecycle. Regular security audits, bias mitigation, and compliance checks are performed to ensure the model adheres to data privacy regulations (e.g., GDPR, CCPA) and ethical standards. Teams can draw on established frameworks such as the NIST AI Risk Management Framework to identify and mitigate security risks, ensuring that models are not only effective but also safe and compliant.
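One small, concrete privacy control is redacting obvious personal data before text is logged or reused for training. The sketch below masks email addresses and one common phone number format; the patterns and the `redact_pii` name are assumptions, and a regular expression pass like this is far from sufficient for GDPR or CCPA compliance on its own.

```python
import re

# Simplified patterns, assumed for illustration; real PII detection needs much more.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_pii(text: str) -> str:
    """Replace email addresses and simple phone numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


# Example usage with made-up contact details.
print(redact_pii("Mail jane.doe@example.com or call 555-123-4567."))
```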

LLMOps ensures that large language models are developed, deployed, and managed effectively, providing continuous optimization and improvements. From data collection to monitoring and security, LLMOps integrates best practices at each stage, ensuring that LLMs are scalable, reliable, and capable of meeting business needs.

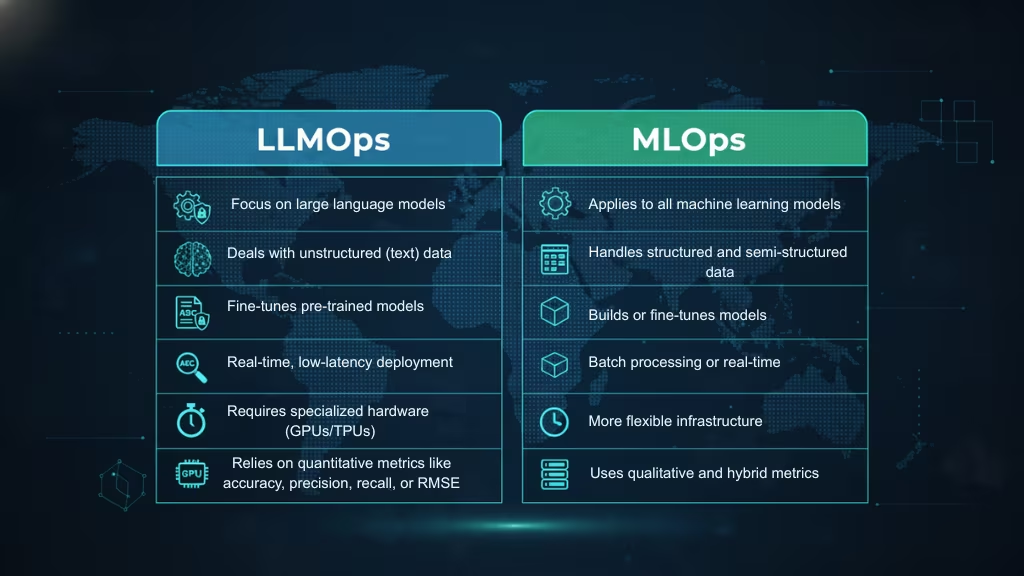

LLMOps vs. MLOps

LLMOps is often considered a specialized branch of MLOps, but it has enough distinct concerns to be treated as a framework in its own right. While both share common practices for managing machine learning models, LLMOps focuses specifically on the unique challenges of managing large language models (LLMs) like OpenAI’s GPT series, Google’s Gemini, or Anthropic’s Claude. These challenges stem from the sheer size, complexity, and real-time application of LLMs, which differ significantly from traditional machine learning models.

Here’s a closer look at how LLMOps differs from MLOps and the specialized needs it addresses:

1. Development and Fine-Tuning

MLOps: In traditional MLOps, models are often developed from scratch, tailored to specific tasks using the organization’s data. Model training, tuning, and fine-tuning are frequently done in-house with clear visibility over the entire training process.

LLMOps: LLMs, due to their size and complexity, are typically pre-trained by large AI companies and fine-tuned for specific tasks or industries. This shift in focus requires LLMOps to concentrate more on adapting these pre-trained models and customizing them with new, domain-specific data. This fine-tuning involves different tools and workflows than those used in MLOps.

2. Hyperparameter Tuning and Cost Optimization

MLOps: For general machine learning models, hyperparameter tuning focuses on improving accuracy, precision, or other performance metrics. It may involve techniques like grid search or random search.

LLMOps: For large language models, hyperparameter tuning becomes essential for cutting computational costs and improving efficiency. LLMs are computationally expensive, so LLMOps includes tuning techniques that not only improve model performance but also help reduce resource consumption. This could include adjusting batch sizes or optimizing the fine-tuning process to require fewer computing resources.
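The idea of tuning under a compute budget can be sketched with a random search that skips any configuration whose estimated cost is too high. Everything here is an assumption made for illustration: the cost proxy, the search space, and the scoring function a caller would supply (in practice, a validation metric from a short fine-tuning run).

```python
import random
from typing import Callable, Dict, List, Optional

Config = Dict[str, float]


def estimated_cost(config: Config) -> float:
    """Hypothetical cost proxy: more epochs and larger batches cost more."""
    return config["epochs"] * config["batch_size"]


def random_search(score_fn: Callable[[Config], float],
                  space: Dict[str, List[float]],
                  budget: float,
                  trials: int = 20,
                  seed: int = 0) -> Optional[Config]:
    """Return the best-scoring sampled config whose cost fits the budget."""
    rng = random.Random(seed)
    best, best_score = None, float("-inf")
    for _ in range(trials):
        config = {name: rng.choice(options) for name, options in space.items()}
        if estimated_cost(config) > budget:
            continue  # skip configurations that exceed the compute budget
        score = score_fn(config)
        if score > best_score:
            best, best_score = config, score
    return best
```

The function returns `None` when no sampled configuration fits the budget, which signals that either the budget or the search space needs revisiting.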

3. Performance Metrics

MLOps: Performance metrics for traditional ML models are generally straightforward to calculate, such as accuracy, AUC (area under the ROC curve), and F1 score.

LLMOps: Performance evaluation in LLMs goes beyond traditional metrics. Common evaluation metrics for LLMs include:

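- BLEU (Bilingual Evaluation Understudy): Scores machine translation by measuring n-gram overlap between the generated text and one or more reference translations.

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): Scores summarization by measuring how much of a reference summary is covered by the generated summary.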

These metrics focus more on natural language understanding and generation, and require specialized evaluation during both model training and post-deployment phases.
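To make the overlap idea behind these metrics concrete, the sketch below computes clipped unigram precision (the 1-gram building block of BLEU) and ROUGE-1 recall on whitespace tokens. It is a simplification: full BLEU also combines higher-order n-grams and a brevity penalty, and the function names are hypothetical.

```python
from collections import Counter
from typing import List


def _overlap(candidate: List[str], reference: List[str]) -> int:
    """Count shared tokens, clipping each token to its count in the reference."""
    return sum((Counter(candidate) & Counter(reference)).values())


def unigram_precision(candidate: str, reference: str) -> float:
    """Share of candidate tokens that also appear in the reference."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    return _overlap(cand, ref) / len(cand) if cand else 0.0


def rouge1_recall(candidate: str, reference: str) -> float:
    """Share of reference tokens that are covered by the candidate."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    return _overlap(cand, ref) / len(ref) if ref else 0.0


# Example usage with made-up sentences.
print(unigram_precision("the cat sat", "the cat sat on the mat"))
print(rouge1_recall("the cat sat", "the cat sat on the mat"))
```

Precision rewards outputs that contain little unsupported text, while recall rewards outputs that cover the reference, which is why the two metrics suit translation and summarization respectively.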

4. Ethical, Security, and Compliance Concerns

MLOps: In traditional machine learning operations, ethical and security concerns primarily focus on data privacy, model fairness, and bias detection.

LLMOps: The complexity of LLMs presents unique ethical challenges, especially with their ability to generate human-like text. Issues such as bias, misinformation, and privacy violations can emerge during model deployment, especially when the models interact with real-world data. Moreover, data provenance, user privacy, and compliance with regulatory standards (like GDPR) become even more pressing with LLMs, requiring continuous monitoring and ethical reviews.

5. Operational and Infrastructure Requirements

MLOps: Traditional machine learning models are usually less resource-intensive and can be trained and deployed on standard hardware like CPUs or even smaller GPU clusters.

LLMOps: LLMs require specialized hardware, such as GPUs or TPUs (Tensor Processing Units), to manage the massive computational power needed for training and real-time inference. They also require distributed computing techniques and a robust infrastructure to scale efficiently, especially when deployed in production environments with low-latency requirements.

6. Model Complexity and Scale

MLOps: Machine learning models in MLOps are typically smaller in size and complexity, and their deployment processes are relatively simpler.

LLMOps: LLMs are inherently more complex and resource-hungry. They require careful management of both scaling and cost, particularly in real-time applications like chatbots or search engines. LLMOps must ensure models are efficiently scaled, using techniques such as model quantization, distillation, and pruning to reduce resource consumption with minimal loss of accuracy.
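Quantization, one of the techniques listed above, can be illustrated with a symmetric int8 scheme on a plain list of weights. This is a minimal sketch under simplifying assumptions (one scale for the whole tensor, no calibration data); the function names are hypothetical, and real deployments use library implementations.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to the int8 range [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Map int8 values back to approximate floating-point weights."""
    return [q * scale for q in quantized]


# Example usage with made-up weights.
q, s = quantize_int8([0.5, -1.0, 0.25, 0.0])
print(q, dequantize(q, s))
```

Each weight is stored in one byte instead of four, and the round trip introduces an error of at most half the scale per weight, which is the source of the small accuracy trade-off.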

7. Deployment and Real-Time Usage

MLOps: In traditional MLOps, models are often deployed for batch processing, where the model can be tested on a dataset and then refined. Deployments are typically more predictable in terms of resource use.

LLMOps: LLMs are typically deployed in real-time environments, interacting with users via chatbots, search engines, or content-generation tools. The real-time nature of these interactions requires LLMOps to ensure low-latency performance, often necessitating advanced optimization strategies.

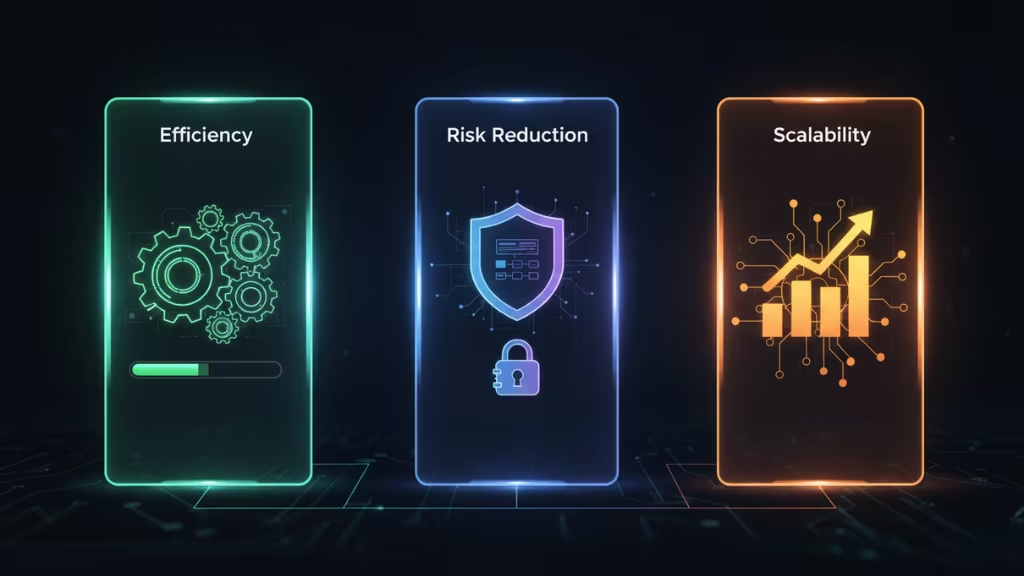

Key Benefits of LLMOps

LLMOps provides organizations with distinct advantages that help streamline the development, deployment, and management of large language models (LLMs). These benefits can be categorized into three major areas: Efficiency, Risk Reduction, and Scalability. Each of these areas plays a crucial role in ensuring that LLMs are optimized for real-world applications.

1. Efficiency

LLMOps enhances the efficiency of the entire model development lifecycle, allowing teams to achieve more with less effort.

First, LLMOps encourages collaboration between key teams such as data scientists, machine learning engineers, DevOps professionals, and business stakeholders. By using a unified platform for communication, model development, and deployment, teams can move faster, make data-driven decisions, and accelerate time-to-market.

In terms of resource optimization, LLMOps helps reduce computational costs by using techniques like model pruning and quantization. These methods cut down on the hardware needed for inference while keeping performance close to that of the original model. Additionally, LLMOps ensures teams have access to the right hardware resources, such as GPUs or TPUs, for fine-tuning and monitoring.

LLMOps also facilitates efficient data management. It encourages robust data sourcing, cleaning, and preparation practices, which ensure that only high-quality data is used for training models. Integration with DataOps ensures a smooth flow of data from ingestion to deployment, helping teams manage data more effectively and make quicker, more informed decisions.

When it comes to hyperparameter optimization, LLMOps allows teams to continuously adjust learning rates, batch sizes, and other parameters, optimizing model performance. This constant refinement, supported by automated iteration and feedback loops, accelerates experimentation and improves results.

Finally, automation plays a critical role in improving efficiency by automating tasks like model training, evaluation, and deployment. This reduces the time spent on manual interventions, accelerates development cycles, and allows for faster testing and iteration.

2. Risk Reduction

LLMOps helps mitigate various risks related to security, compliance, and model performance.

In terms of security, LLMOps ensures that models are protected against vulnerabilities and unauthorized access. By implementing enterprise-grade security measures, LLMOps helps safeguard sensitive data and ensures that it remains protected, following the best practices in data governance and compliance with regulations such as GDPR or CCPA.

When it comes to compliance, LLMOps ensures transparency and simplifies responses to regulatory audits. This helps businesses adhere to strict legal and industry standards. The transparency of LLMOps processes makes it easier to track data usage and ensure that models are functioning in compliance with ethical standards.

Ethical considerations, such as bias mitigation, are also addressed. LLMOps includes regular bias audits and ensures that models are not producing harmful or discriminatory outputs. The framework allows for continuous monitoring and updating to correct any issues as they arise.

Moreover, LLMOps integrates disaster recovery plans and robust monitoring systems to ensure that models remain operational and resilient in case of failure. This proactive approach reduces the risk of model downtime and helps quickly restore systems if disruptions occur.
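One simple resilience pattern is to fall back to a secondary model endpoint when the primary one fails. The sketch below treats each backend as a plain callable; the `generate_with_fallback` helper and the stand-in backends are hypothetical.

```python
from typing import Callable, List, Sequence


def generate_with_fallback(prompt: str,
                           backends: Sequence[Callable[[str], str]]) -> str:
    """Try each backend in order and return the first successful response."""
    errors: List[Exception] = []
    for backend in backends:
        try:
            return backend(prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(exc)
    raise RuntimeError(f"all {len(errors)} backends failed")


# Stand-in backends, assumed for illustration.
def primary(prompt: str) -> str:
    raise TimeoutError("primary model timed out")


def secondary(prompt: str) -> str:
    return f"fallback answer to: {prompt}"


print(generate_with_fallback("What is LLMOps?", [primary, secondary]))
```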

3. Scalability

As organizations scale their use of LLMs, the ability to manage increased demand and complexity becomes essential. LLMOps enables scalability by optimizing both the performance and infrastructure needed to deploy LLMs at a larger scale.

LLMOps helps optimize model latency, ensuring that LLMs can handle real-time requests efficiently. By using optimization techniques such as model distillation and quantization, LLMOps reduces the computational burden, resulting in faster response times with little impact on the quality of the model’s output.

Scalability is also enhanced through CI/CD pipelines, which allow for continuous integration, delivery, and deployment of models. This automation makes it easier to update and improve models without disrupting the user experience, keeping LLMs responsive to changing business needs.

Another significant aspect of scalability is distributed computing. LLMOps enables the management of large-scale deployments, allowing teams to efficiently handle thousands of models simultaneously. This distributed architecture helps businesses scale their operations and maintain performance even as their models handle larger workloads.

Finally, LLMOps supports collaborative model management, fostering a more unified approach between data teams, DevOps, and IT. This reduces conflicts and accelerates release cycles, helping to ensure that LLMs can be scaled quickly and efficiently to meet business demands.

Best Practices for LLMOps

LLMOps is a structured approach that spans the entire lifecycle of large language models (LLMs), from development to deployment and continuous optimization. Understanding how LLMOps works in practice is essential for organizations looking to implement these models at scale. Here’s a breakdown of the key stages involved in LLMOps and how they contribute to the smooth operation of LLM-powered systems.

1. Data Management

Data is the foundation of any AI model, and for large language models, this is especially true. LLMOps starts with data management, which involves sourcing, cleaning, and organizing vast amounts of data to train and fine-tune models.

The data needs to be high-quality and well-structured to ensure that the model is capable of making accurate predictions. LLMOps incorporates best practices in data preprocessing, which includes tasks such as removing duplicates, correcting errors, and ensuring that the dataset is representative of the real-world use cases the model will encounter.
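Removing duplicates, one of the preprocessing tasks named above, can be sketched by hashing a normalized form of each record. This only catches exact duplicates after case and whitespace normalization; near-duplicate detection needs other techniques. The `deduplicate` name is hypothetical.

```python
import hashlib
from typing import Iterable, List


def deduplicate(records: Iterable[str]) -> List[str]:
    """Keep the first occurrence of each record, ignoring case and extra whitespace."""
    seen = set()
    unique: List[str] = []
    for record in records:
        normalized = " ".join(record.lower().split())
        key = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Example usage with made-up records.
print(deduplicate(["Hello  world", "hello world", "Goodbye"]))
```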

Data management also ensures that domain-specific data is integrated into the model, making it more accurate for specific applications, whether that’s customer service, healthcare, or any other industry.

2. Model Training and Fine-Tuning

Once the data is prepared, LLMOps moves into the model training phase. This is where the model is fed the curated data and begins to learn patterns, relationships, and features. For LLMs, training can be highly resource-intensive, requiring substantial computational power, often utilizing GPUs or TPUs.

Unlike smaller models, LLMs often start with a foundation model that is already pre-trained on a large corpus of text. This pre-trained model can then be fine-tuned with domain-specific data to improve its performance for particular tasks, such as natural language understanding or content generation.

LLMOps ensures that hyperparameters such as learning rates and batch sizes are tuned to optimize the model’s performance while balancing the need for computational efficiency. The framework also automates the retraining process, allowing models to improve over time without requiring manual intervention.

3. Deployment and Integration

After the model is trained and fine-tuned, the next step is deployment. LLMOps provides a streamlined process for deploying large language models into production environments, where they will interact with end-users or other systems.

During deployment, LLMOps focuses on ensuring that models are integrated into business applications efficiently. This could include integration into customer service chatbots, search engines, or recommendation systems. LLMOps ensures that the models operate in real-time, with low latency and high availability.

CI/CD pipelines play a critical role in deployment by automating the process of integrating new model versions and updates into production without disrupting user experience. Continuous model monitoring during deployment ensures that models are performing as expected and that issues are detected early.
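A CI/CD pipeline for models often includes a gate that decides whether a candidate version may replace the one in production. The sketch below compares two metric dictionaries; the metric names (`quality`, `p95_latency_ms`) and the default thresholds are assumptions chosen for illustration.

```python
from typing import Dict


def should_promote(baseline: Dict[str, float],
                   candidate: Dict[str, float],
                   min_quality_gain: float = 0.0,
                   max_latency_regression_ms: float = 50.0) -> bool:
    """Promote only if quality does not drop and latency does not regress too far."""
    quality_gain = candidate["quality"] - baseline["quality"]
    latency_regression = candidate["p95_latency_ms"] - baseline["p95_latency_ms"]
    return (quality_gain >= min_quality_gain
            and latency_regression <= max_latency_regression_ms)


# Example usage with made-up evaluation results.
production = {"quality": 0.82, "p95_latency_ms": 400.0}
new_model = {"quality": 0.85, "p95_latency_ms": 430.0}
print(should_promote(production, new_model))
```

Encoding the promotion rule as code makes the release criteria explicit and lets the pipeline block a deployment automatically.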

4. Monitoring and Continuous Optimization

Once the model is live, continuous monitoring becomes critical. LLMOps ensures that models are regularly assessed for performance, accuracy, and efficiency. This includes tracking key performance indicators (KPIs) such as response time, accuracy, and user satisfaction.

Real-time feedback loops allow the model to continuously improve based on user interactions, data input, and evolving business needs. LLMOps ensures that the model remains relevant and up-to-date, adapting to new trends or shifts in user behavior.

In addition to performance monitoring, security checks and ethics reviews are conducted regularly to ensure that the model continues to operate securely and ethically. Any biases or security vulnerabilities detected are addressed through retraining or fine-tuning.

5. Feedback and Iteration

Finally, feedback plays a crucial role in the success of LLMOps. As the model is used in production, it gathers user feedback, which helps identify areas for improvement. This feedback is used to fine-tune the model further and ensure it delivers the best possible performance.

LLMOps ensures that iteration is part of the workflow by enabling continuous retraining of the model based on new data and user interactions. This process helps the model evolve and adapt over time, improving its ability to meet the changing needs of the organization and its users.
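A feedback loop needs some rule for deciding when user feedback warrants action. The sketch below tracks thumbs-up and thumbs-down votes in a sliding window and flags the model for review when the approval rate drops; the `FeedbackWindow` class, window size, and threshold are hypothetical.

```python
from collections import deque
from typing import Deque


class FeedbackWindow:
    """Track recent user votes and flag the model when approval drops."""

    def __init__(self, size: int = 100, min_positive_rate: float = 0.8) -> None:
        self._votes: Deque[bool] = deque(maxlen=size)
        self._size = size
        self._min_positive_rate = min_positive_rate

    def record(self, thumbs_up: bool) -> None:
        self._votes.append(thumbs_up)  # oldest vote is dropped once the window is full

    def positive_rate(self) -> float:
        return sum(self._votes) / len(self._votes) if self._votes else 1.0

    def needs_review(self) -> bool:
        """Only raise the flag once the window holds enough votes to be meaningful."""
        window_full = len(self._votes) == self._size
        return window_full and self.positive_rate() < self._min_positive_rate
```

Waiting until the window is full avoids triggering a retraining review on the basis of the first few votes after a release.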

The Future of LLMOps

As large language models (LLMs) continue to grow in complexity and capabilities, the future of LLMOps will play a pivotal role in shaping how organizations manage, deploy, and optimize these advanced AI systems. The evolution of LLMOps will be driven by several emerging trends and technologies, addressing the growing demands of real-time applications, scalability, and ethical AI practices.

1. Advanced Automation and AI Integration

The future of LLMOps will see even greater integration of automation in managing the lifecycle of LLMs. Automated model retraining, hyperparameter optimization, and continuous monitoring will become more refined, allowing LLMs to adapt quickly to new data and user needs. This automation will enable AI teams to focus more on high-level tasks and innovation, while the systems handle routine updates and performance tuning.

2. Increased Focus on Ethical AI and Bias Mitigation

As LLMs become more embedded in business and consumer applications, ethical concerns around bias, fairness, and accountability will become even more prominent. LLMOps will evolve to include real-time bias detection and bias mitigation strategies, ensuring that models are continuously monitored for ethical compliance. In the future, AI systems may be equipped with built-in ethical frameworks that help organizations address potential harms before they manifest.

3. Enhanced Collaboration Across Teams

LLMOps will further enhance cross-functional collaboration between data scientists, ML engineers, developers, and IT teams. As AI systems become more complex, this collaboration will be crucial in managing the lifecycle of LLMs, particularly in large enterprises. Unified platforms that streamline communication, model version control, and performance tracking will become more commonplace, improving the speed and accuracy of decision-making.

4. Advanced Infrastructure and Scalability Solutions

With LLMs becoming increasingly resource-intensive, the future of LLMOps will involve the development of advanced infrastructure solutions. These will include edge computing to reduce latency in real-time applications and distributed AI systems that enable the training and deployment of models at scale. As LLMs grow in size and scope, cloud-based AI services and specialized hardware (like TPUs and GPUs) will continue to evolve, allowing LLMOps to keep pace with growing computational demands.

5. Stronger Security and Compliance Protocols

As LLMs become integral to industries such as finance, healthcare, and law, security and compliance will be paramount. The future of LLMOps will include more robust frameworks for data privacy, auditability, and regulatory compliance. We can expect LLMOps to integrate seamlessly with security tools and compliance platforms, making it easier for organizations to meet ever-increasing regulatory requirements and safeguard sensitive information.

6. Real-Time AI and Feedback Loops

In the future, LLMOps will further develop its ability to integrate real-time user feedback into the model’s learning process. With continuous integration/continuous deployment (CI/CD) pipelines and online learning methods, LLMs will be able to adapt instantly based on incoming data. This will lead to more responsive AI systems, where LLMs improve dynamically and in real time, delivering highly personalized and context-aware experiences.

7. Integration of Multimodal Models

As LLMs continue to evolve, we will see multimodal models that can handle not only text but also images, audio, and video. LLMOps will need to adapt to the complexities of managing these multimodal models, ensuring seamless integration and performance across multiple types of data. This will open up new possibilities for applications that require a combination of text, voice, and visual understanding, such as virtual assistants, self-driving cars, and augmented reality systems.

Conclusion

LLMOps is essential for managing the lifecycle of large language models (LLMs) in production. By focusing on efficiency, risk reduction, and scalability, LLMOps ensures that models perform well, adapt to new data, and scale effectively. It helps teams collaborate, automate processes, and continuously monitor and optimize model performance.

Adopting LLMOps is crucial for organizations looking to integrate LLMs into their operations. It allows businesses to deploy AI models securely, efficiently, and with minimal risk while ensuring they continue to provide value over time.

As AI use grows, LLMOps will play an increasingly important role in ensuring that LLMs are reliable, adaptable, and optimized for real-world applications. Organizations that embrace LLMOps will have a competitive edge in scaling and maintaining high-performance AI systems.

FAQs

What is the difference between LLMOps and MLOps?

LLMOps is a specialized subset of MLOps designed specifically for managing large language models (LLMs), whereas MLOps is a broader framework for managing all types of machine learning models. LLMOps focuses on the unique challenges of LLMs, such as handling large-scale data, fine-tuning pre-trained models, and ensuring real-time performance.

Why is LLMOps important for large language models?

LLMOps ensures that LLMs are efficiently deployed, continuously monitored, and securely maintained. It focuses on optimizing model performance, reducing operational costs, and ensuring compliance with regulatory standards, which is crucial for LLMs used in real-world applications.

How does LLMOps help improve model performance?

LLMOps optimizes model performance through continuous retraining, hyperparameter tuning, and real-time feedback loops. It ensures that models stay accurate and relevant by adapting to new data and user interactions.

What are the key components of LLMOps?

Key components of LLMOps include data management, model training and fine-tuning, deployment and integration, continuous monitoring, and security and compliance. These elements ensure that LLMs are developed, deployed, and maintained at scale.

How can organizations scale their LLMs with LLMOps?

LLMOps provides tools to optimize model latency, manage infrastructure efficiently, and ensure that models can handle increased user demand. It integrates CI/CD pipelines to enable rapid updates and improves scalability by supporting distributed computing.

How does LLMOps ensure security and compliance?

LLMOps includes security audits, bias mitigation, and compliance checks to ensure that LLMs meet regulatory standards like GDPR and CCPA. Regular ethics reviews help detect and address issues related to model fairness and user privacy.

What industries benefit most from LLMOps?

Industries like customer service, e-commerce, healthcare, and finance can greatly benefit from LLMOps. It enables businesses in these sectors to deploy AI solutions that handle large amounts of data, provide personalized experiences, and operate at scale.

This page was last edited on 2 December 2025, at 3:53 pm
