Most enterprise AI pilots stall before delivering real business impact, and only a small fraction ever scale into production. A 2025 study of enterprise AI initiatives found that roughly 95% of generative AI pilot programs stall without delivering measurable business value, which highlights the gap between experimentation and real-world impact.

This guide explains how to operationalize AI after the pilot phase with an actionable, step-by-step framework to help you overcome common obstacles, avoid typical pitfalls, and confidently move from proof-of-concept to production deployment.

Summary Table: AI Operationalization – Key Steps & Success Factors

| Step | Owner | Essential Tools/Processes | Outcome |
| --- | --- | --- | --- |
| Align goals & stakeholders | Executive, business | KPI mapping, comms cadence | Unified vision, clear objectives |
| Assess data & infrastructure | Data eng., IT | Data pipeline audit, cloud | Clean, scalable data, ready infra |
| Establish governance & ownership | Compliance, AI lead | Role mapping, documentation | Accountability, regulatory compliance |
| Build/automate MLOps pipelines | MLOps, data science | MLflow, deployment scripts | Reliable, repeatable model ops |
| Drive org. change management | Change leader, HR | Training, incentives | High adoption, minimal resistance |
| Monitor & optimize in production | AI ops, IT | Dashboards, alerting | Sustained value, early issue response |

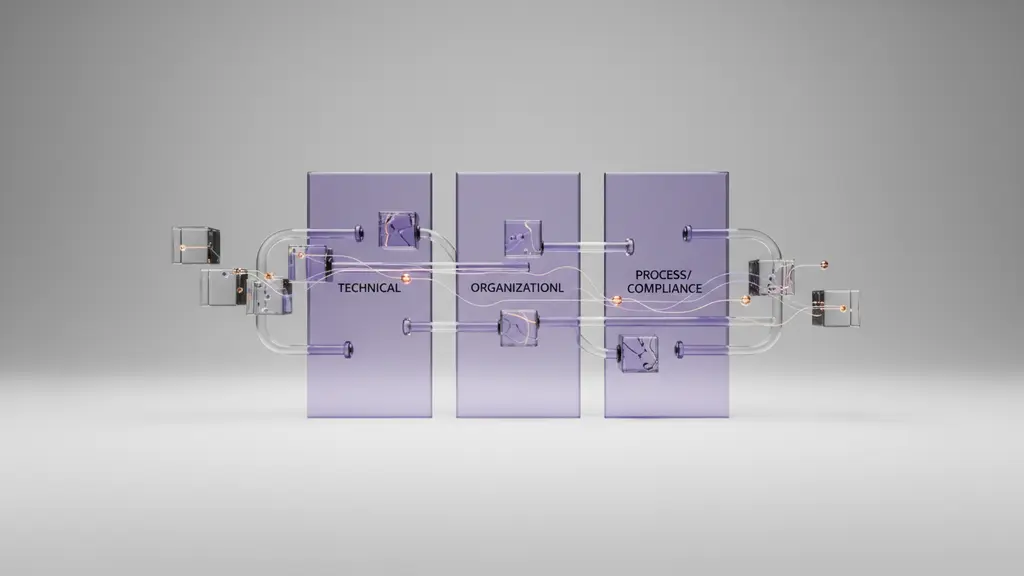

What Technical and Organizational Barriers Prevent AI Pilots from Scaling?

Numerous technical and organizational roadblocks keep AI pilots from becoming scalable, production-grade solutions. Recognizing these early helps you design effective strategies for operationalization.

Key Barriers to Scaling AI Pilots:

- Technical Barriers
  - Poor data quality or fragmented datasets
  - Integration challenges with legacy IT systems
  - Insufficient or inflexible infrastructure (on-premise/cloud limitations)
  - Lack of robust MLOps pipelines for deployment and monitoring
  - Model drift risks that affect performance over time

- Organizational Barriers
  - Executive sponsorship gaps or shifting priorities
  - Misalignment between AI project KPIs and business objectives
  - Resistance to change or low stakeholder engagement
  - Talent shortages in data science, MLOps, or data engineering
  - Fragmented project ownership or siloed teams

- Process/Compliance Barriers
  - Absence of standardized workflows and documentation
  - Compliance confusion (e.g., GDPR, industry standards)
  - No framework for continuous improvement or accountability

Top 5 AI Operationalization Barriers (Quick Reference):

| Barrier | Type |
| --- | --- |
| Data quality/integration | Technical |
| Legacy infrastructure limitations | Technical |
| KPIs misaligned with business | Organizational |
| Lack of executive sponsorship | Organizational |
| Unclear accountability/compliance | Process |

Addressing these barriers together, rather than one at a time, makes for a smoother path from pilot to production.

When Is Your AI Pilot Ready to Move to Production? (Readiness Criteria & Decision Triggers)

Deciding when to operationalize an AI pilot is critical—moving too soon or too late can undermine results. Leaders should use a structured readiness checklist to trigger production deployment.

AI Pilot to Production Readiness Checklist:

- Business KPI Alignment: The pilot has met or exceeded key business metrics (ROI, accuracy, efficiency).

- Technical Stability: The AI model demonstrates consistent, reliable performance with repeatable results in real-world tests.

- Data Governance: Data pipelines, quality controls, and access are fully established and compliant with security/privacy standards.

- Stakeholder Buy-in: End-users and sponsors confirm value, usability, and support for the solution.

- Risk & Compliance Review: Regulatory, ethical, and operational risks are clearly assessed and mitigation measures are in place.

Organizations that operationalize AI based on rigorous readiness criteria reduce deployment risk and maximize business value.
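The checklist above can be treated as an explicit go/no-go gate. The following is a minimal sketch, assuming each criterion has already been reviewed and reduced to a pass/fail verdict; the `ReadinessCheck` and `production_gate` names and the sample verdicts are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReadinessCheck:
    """One checklist criterion with a pass/fail verdict and supporting evidence."""
    name: str
    passed: bool
    evidence: str = ""


def production_gate(checks: list[ReadinessCheck]) -> tuple[bool, list[str]]:
    """Return (ready, blockers); the pilot is ready only if every check passed."""
    blockers = [check.name for check in checks if not check.passed]
    return (not blockers, blockers)


# Hypothetical verdicts for an example pilot; real values come from your reviews.
checks = [
    ReadinessCheck("Business KPI alignment", True, "pilot met its agreed targets"),
    ReadinessCheck("Technical stability", True, "stable across repeated test runs"),
    ReadinessCheck("Data governance", True, "access controls reviewed"),
    ReadinessCheck("Stakeholder buy-in", True, "sponsor and end users signed off"),
    ReadinessCheck("Risk & compliance review", False, "mitigation plan still open"),
]

ready, blockers = production_gate(checks)
print("Ready for production:", ready)
print("Blockers:", blockers)
```

Requiring every criterion to pass is a deliberate simplification; a team could instead weight criteria or allow documented exceptions.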

How to Operationalize AI After the Pilot Phase: 6 Essential Steps

A successful transition from pilot to production AI requires a deliberate, six-step operationalization framework. Each step tackles a core challenge in scaling AI.

Step 1: Align Business Goals & Stakeholders

Begin by ensuring all stakeholders—from executive sponsors to business unit leaders—share a common vision for AI success.

- Redefine project objectives post-pilot in line with strategic business priorities.

- Map pilot outcomes to business KPIs (e.g., improved revenue, reduced costs, faster processes).

- Engage stakeholders and users early, building ownership and advocacy for deployment.

- Institute regular communication rhythms to stay aligned and address concerns quickly.

Step 2: Assess and Strengthen Data & Infrastructure Readiness

AI thrives only with robust, scalable data and infrastructure.

- Conduct a data pipeline gap analysis to identify and address data quality, accessibility, or lineage issues.

- Plan infrastructure upgrades—consider cloud migration, API development, and legacy integration as needed for reliable scaling.

- Establish strong data governance: assign stewards, implement access controls, and verify data integrity.
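A data pipeline gap analysis usually starts with simple, automatable checks. The sketch below is one possible starting point, assuming records arrive as plain dictionaries; the `audit_rows` function, the column names, and the sample rows are hypothetical. It reports the share of missing values per required column and any duplicated record keys.

```python
from collections import Counter


def audit_rows(rows: list[dict], key: str, required: list[str]) -> dict:
    """Summarize missing values and duplicate keys for a batch of records."""
    total = len(rows)
    null_rate = {}
    for column in required:
        # Treat both None and empty strings as missing.
        missing = sum(1 for row in rows if row.get(column) in (None, ""))
        null_rate[column] = missing / total if total else 0.0
    key_counts = Counter(row.get(key) for row in rows)
    duplicate_keys = [value for value, count in key_counts.items() if count > 1]
    return {"rows": total, "null_rate": null_rate, "duplicate_keys": duplicate_keys}


# Hypothetical sample batch, for illustration only.
rows = [
    {"loan_id": 1, "income": 52000, "region": "north"},
    {"loan_id": 2, "income": None, "region": "south"},
    {"loan_id": 2, "income": 61000, "region": ""},
    {"loan_id": 3, "income": 48000, "region": "east"},
]

report = audit_rows(rows, key="loan_id", required=["income", "region"])
print(report)
```

Checks like these can run on every pipeline load, with the results feeding the data steward's review.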

Step 3: Build Out Formal AI Governance & Assign Ownership

Clear governance transforms pilots into sustainable enterprise assets.

- Define clear accountabilities using role maps and cross-functional AI committees.

- Formalize regulatory/compliance reviews, keeping standards like GDPR at the fore.

- Maintain transparent, auditable documentation, including model cards that detail assumptions, limitations, and updates.
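A model card can be kept as structured data so that it is versioned and audited alongside the model. The sketch below is illustrative only: the `ModelCard` fields mirror the items named above (assumptions, limitations, updates), while the field names and the example values are hypothetical rather than a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Minimal, serializable record of what a model is for and what it assumes."""
    model_name: str
    version: str
    owner: str
    intended_use: str
    assumptions: list[str] = field(default_factory=list)
    limitations: list[str] = field(default_factory=list)
    updates: list[str] = field(default_factory=list)

    def log_update(self, note: str) -> None:
        """Append a change note so the card keeps an auditable history."""
        self.updates.append(note)

    def to_json(self) -> str:
        """Serialize the card for storage next to the model artifact."""
        return json.dumps(asdict(self), indent=2)


# Hypothetical example values, for illustration only.
card = ModelCard(
    model_name="loan-risk-model",
    version="1.1.0",
    owner="AI product owner",
    intended_use="Rank loan applications for manual underwriting review",
    assumptions=["Applicant data is refreshed daily"],
    limitations=["Not validated for business loans"],
)
card.log_update("1.1.0: retrained on the latest quarter of data")
print(card.to_json())
```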

Step 4: Implement and Automate MLOps Pipelines

Operational-grade AI demands automated, repeatable processes.

- Automate model deployment, retraining, monitoring, and versioning via MLOps platforms.

- Select fit-for-purpose MLOps tools to unify and scale workflows (see the Essential Tools & Platforms table).

- Develop model drift detection scripts and incident response playbooks to ensure reliability.
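One common way to script drift detection is the Population Stability Index (PSI), which compares the distribution of a feature or score in production against a baseline. The sketch below is a minimal standard-library version, not a reference to any specific platform's implementation; the `psi` function name, the equal-width binning, the synthetic data, and the 0.2 alert threshold (a frequently quoted rule of thumb) are all assumptions for illustration.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10,
        eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a production sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant baselines

    def fractions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            # Clamp values outside the baseline range into the edge bins.
            counts[min(max(index, 0), bins - 1)] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(count / len(values), eps) for count in counts]

    base, prod = fractions(expected), fractions(actual)
    return sum((p - b) * math.log(p / b) for b, p in zip(base, prod))


# Synthetic data, for illustration only.
baseline = [i / 100 for i in range(100)]          # spread across 0.00 to 0.99
production = [0.5 + i / 200 for i in range(100)]  # shifted toward high values

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff; tune per use case
score = psi(baseline, production)
if score > DRIFT_THRESHOLD:
    print(f"Drift alert: PSI={score:.2f} exceeds {DRIFT_THRESHOLD}")
```

A check like this would typically run on a schedule and hand off to the incident response playbook when the threshold is exceeded.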

Step 5: Lead Organizational Change Management for Adoption

Change management is as vital as technology.

- Launch targeted user and stakeholder training to build familiarity and skills.

- Align incentives and recognition systems to reward adoption of AI solutions.

- Drive a feedback-centric culture, encouraging continuous improvement and adaptation.

Step 6: Monitor, Optimize, and Maintain AI in Production

Production AI is a living system—continuous monitoring and improvement are essential.

- Monitor business and technical KPIs (accuracy, ROI, user adoption) with real-time dashboards.

- Maintain SLAs and set clear thresholds for AI performance.

- Institute ongoing reviews and optimization cycles, including incident triage and root-cause analysis.
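Thresholds are easier to enforce when they are written down as data and checked automatically. The following sketch assumes the latest metric values are available as a dictionary; the `Threshold` class, the `breaches` function, the metric names, and the limits are hypothetical placeholders, not recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    """A limit for one metric; higher_is_better sets the comparison direction."""
    metric: str
    limit: float
    higher_is_better: bool = True


def breaches(observed: dict[str, float], thresholds: list[Threshold]) -> list[str]:
    """Return one alert message per breached or missing metric."""
    alerts = []
    for threshold in thresholds:
        value = observed.get(threshold.metric)
        if value is None:
            # A metric that stops reporting is itself worth an alert.
            alerts.append(f"{threshold.metric}: no data")
            continue
        if threshold.higher_is_better:
            ok = value >= threshold.limit
        else:
            ok = value <= threshold.limit
        if not ok:
            alerts.append(f"{threshold.metric}: {value} breaches limit {threshold.limit}")
    return alerts


# Hypothetical limits and readings, for illustration only.
thresholds = [
    Threshold("accuracy", 0.90),
    Threshold("p95_latency_ms", 300.0, higher_is_better=False),
    Threshold("weekly_active_users", 50.0),
]
observed = {"accuracy": 0.91, "p95_latency_ms": 480.0}

alerts = breaches(observed, thresholds)
for alert in alerts:
    print(alert)
```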

6-Step Checklist: Operationalize AI After the Pilot Phase

- Business & stakeholder alignment

- Data & infrastructure readiness

- Governance & ownership

- MLOps pipeline automation

- Change management & user adoption

- Continuous monitoring & optimization

What Does a Real-World AI Operationalization Journey Look Like?

Seeing these steps in action clarifies what success looks like—and the detours to avoid.

Mini Case Study 1: Financial Services—Automated Risk Assessment

- Before: A major bank piloted an AI-driven loan risk model that demonstrated 23% faster approvals, but siloed deployment limited impact.

- Operationalization: The bank launched a cross-functional AI governance team, integrated model output into core underwriting systems, and retrained staff. According to executives, user adoption rose by 60% in Q2 2025 following targeted change management.

- Lesson: Strong governance and early business-unit alignment unlock widescale value.

Mini Case Study 2: Manufacturing—Predictive Maintenance at Scale

- Before: A manufacturer piloted AI to predict equipment failure, cutting downtime by 12% on a small set of machines.

- Operationalization: Automated data pipelines and MLOps deployment expanded coverage across factories. Model monitoring and rapid incident response ensured consistent benefit.

- Lesson: MLOps and infrastructure readiness are critical for repeatable, scalable ROI.

Expert Insight:

“Organizations that invest in MLOps, role clarity, and change management achieve up to 80% higher returns on AI, according to BCG’s 2026 enterprise AI benchmarking study.”

What Metrics and Monitoring Practices Ensure Production AI Delivers Value?

Maintaining AI impact requires clear, real-time measurement of core business and technical objectives.

Top 6 Production AI Metrics:

| Metric | Description | Owner/Team |
| --- | --- | --- |
| Business ROI | Financial return or cost savings | Executive sponsor |
| Model Accuracy | Predictive performance in production | Data science/MLOps |
| Uptime/Availability | System stability and reliability | IT/Ops |
| User Adoption | Active usage by intended teams | Change leader/business |
| Model Drift/Alerts | Monitoring for performance degradation | MLOps engineer |
| Incident Response | Time to identify/resolve issues | AI ops/compliance |

Common platforms such as Datadog, MLflow, and Azure Monitor enable automated tracking, proactive alerting, and dashboard visualizations. Monitoring for both data drift (changes in the statistical distribution of inputs) and concept drift (changes in the relationship between inputs and outcomes, often caused by shifts in business context) is crucial for sustained value.
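As an illustration of the Uptime/Availability and Incident Response metrics in the table above, both can be derived from a simple log of outage windows. This is a sketch under stated assumptions: the `availability` and `mean_time_to_resolve` functions and the sample incidents are hypothetical, and a monitoring platform would normally compute these figures for you.

```python
from datetime import datetime, timedelta

Outage = tuple[datetime, datetime]  # (start, resolved)


def total_downtime(outages: list[Outage]) -> timedelta:
    """Add up the duration of every outage window."""
    return sum((end - start for start, end in outages), timedelta())


def availability(window: timedelta, outages: list[Outage]) -> float:
    """Fraction of the reporting window during which the service was up."""
    return 1 - total_downtime(outages) / window


def mean_time_to_resolve(outages: list[Outage]) -> timedelta:
    """Average outage duration; zero when there were no incidents."""
    if not outages:
        return timedelta()
    return total_downtime(outages) / len(outages)


# Hypothetical incident log for a 30-day reporting window.
outages = [
    (datetime(2026, 1, 5, 9, 0), datetime(2026, 1, 5, 11, 0)),    # 2 hours
    (datetime(2026, 1, 20, 14, 0), datetime(2026, 1, 20, 15, 0)),  # 1 hour
]
window = timedelta(days=30)

uptime = availability(window, outages)
mttr = mean_time_to_resolve(outages)
print(f"Availability: {uptime:.4%}, mean time to resolve: {mttr}")
```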

Which Tools, Roles & Structures Enable Enterprise-Scale AI?

Scaling AI post-pilot hinges on assembling the right people, platforms, and organizational frameworks.

Key Roles for Operationalizing AI:

- MLOps Engineer: Builds/maintains deployment pipelines

- Data Steward: Ensures data quality and compliance

- Change Leader: Drives user adoption and training

- AI Product Owner: Manages requirements and value delivery

- Compliance/Risk Manager: Oversees policy and ethical standards

Essential Tools & Platforms:

| Tool/Platform | Core Function |
| --- | --- |
| MLflow, Kubeflow | Pipeline automation |
| Databricks, Azure ML | Model training/deployment |
| Datadog, Grafana | Monitoring/alerting |
| Collibra, Alation | Data governance |

Team Structure Evolution:

- Pilot Phase: Small, siloed data science and IT groups

- Production Phase: Cross-functional teams integrating business units, MLOps, governance, and compliance

Best-practice organizations also tap external consultants or vendors to accelerate scale, especially for MLOps or AI governance expertise.

What Are the Most Common Pitfalls, and How Can You Avoid Them?

Many AI operationalization efforts fail due to avoidable mistakes. Awareness and prevention are critical.

Top 5 Pitfalls and Prevention Strategies:

- Scope Drift
  - Prevention: Set clear business KPIs and limit expansion until first successes are established.

- Lack of Executive Owner
  - Prevention: Appoint an accountable executive sponsor from project initiation.

- Skipping Change Management
  - Prevention: Invest in user training, incentives, and feedback loops from the start.

- Overlooking Model Drift
  - Prevention: Implement automated monitoring and routine model evaluations.

- Neglecting Governance
  - Prevention: Formalize roles, responsibilities, and compliance processes before launch.

Checklist: Is Your AI Project at Risk?

- Do you have KPIs and an executive sponsor?

- Are organizational and technical owners assigned?

- Is there a documented change management plan?

- Are MLOps practices in place for monitoring and incident response?

- Are data quality and governance verified?

Proactive risk checks keep your AI initiatives on track and set the stage for long-term value.

What Are The Main Barriers To AI Operationalization After A Pilot?

The most common barriers to AI operationalization include technical integration challenges, misaligned business KPIs, and lack of executive sponsorship. Organizations also struggle with weak data infrastructure, unclear governance and compliance processes, and resistance to organizational change when trying to operationalize an AI system.

How Do You Know When An AI Pilot Is Ready To Be Operationalized?

An AI pilot is ready to move forward when it consistently meets defined business KPIs, performs reliably on production-scale data, passes security and governance reviews, and has strong stakeholder alignment across business and technical teams.

What Role Does MLOps Play In AI Operationalization?

MLOps is critical to AI operationalization because it enables reliable deployment, monitoring, retraining, and maintenance of models. It ensures repeatable processes, reduces operational risk, and supports scaling AI systems from pilot to production.

Which Teams Are Essential To Successfully Operationalize An AI Solution?

To operationalize an AI solution, organizations need cross-functional collaboration among MLOps engineers, data stewards, compliance and risk managers, change management leaders, and business product owners who connect AI outcomes to real business value.

How Do You Measure The Success Of AI Operationalization?

Success is measured by tracking business ROI, model performance and accuracy, system uptime, user adoption rates, incident frequency, and response times through automated dashboards and regular reviews.

How Can Organizations Prevent Model Drift In Production AI?

Preventing model drift requires continuous monitoring for data and concept drift, scheduled model retraining, and clearly defined incident response processes to quickly address performance degradation.

What Does Change Management Look Like In AI Operationalization?

AI operationalization change management focuses on stakeholder engagement, targeted training, ongoing communication, and incentive alignment to ensure adoption and reduce resistance as AI systems become part of daily workflows.

How Long Does It Take To See ROI After AI Operationalization?

ROI timelines vary by use case and industry, but many organizations begin to see measurable returns within 6 to 18 months after successfully operationalizing AI, based on enterprise case studies.

How Should Data Quality Be Managed At Scale During AI Operationalization?

Organizations should appoint data stewards, automate data quality checks, implement lineage tracking, and enforce access controls to maintain data reliability as AI systems scale.

How Is AI Governance Applied In Practice?

AI governance is implemented through clear ownership roles, regular compliance audits, transparent documentation such as model cards, and continuous oversight from an AI governance committee or center of excellence.

Conclusion & Next Steps: Transform AI Pilots Into Business Value

Operationalizing AI after the pilot phase is where organizations unlock genuine, repeatable impact—not just isolated wins. By following this end-to-end, six-step framework, aligning leaders and users, and investing in robust MLOps, governance, and change management, you position your enterprise for sustainable advantage. Proactive action, rigorous criteria, and continual monitoring set your AI efforts apart. Ready to move forward? Download our operationalization checklist or connect with an expert to accelerate your journey from pilot to production.

Key Takeaways

- Most AI pilots stall due to technical and organizational barriers (roughly 95% of generative AI pilots, per the 2025 study cited in the introduction); operationalization requires strategy and structure.

- A six-step framework (alignment, readiness, governance, MLOps, change management, monitoring) accelerates pilot-to-production success.

- Robust data pipelines, formal governance, and cross-functional teams are essential for enterprise-scale AI.

- Continuous KPI tracking and incident response keep production AI accountable and valuable.

- Avoidable pitfalls—scope drift, lack of ownership, governance gaps—can be mitigated with early planning and active management.

This page was last edited on 8 February 2026, at 1:43 pm

Contact Us Now

Start a conversation with our team to solve complex challenges and move forward with confidence.