- Why and When to Hire Generative AI Developers

- Skills to Look for in a Generative AI Developer

- How to Assess Candidates During Interviews

- Which Generative AI Hiring Model Delivers the Best Return: In-House, Remote, or Hybrid?

- Costs of Hiring Generative AI Developers

- How to Hire the Right Generative AI Developer

- Common Signs of an Unsuccessful Hire

Hiring generative AI developers sounds straightforward until you try to put AI into production. Building a demo is easy. Building something that stays accurate, secure, and affordable once real users show up is not.

That’s where many teams run into trouble. They hire for tools instead of experience, ship fast, and then watch costs climb or quality drop. The problem usually isn’t the idea, it’s the hire.

This guide walks through how to hire generative AI developers the right way: when you actually need one, what skills matter beyond prompts, how to assess candidates, what the real costs look like, and how to spot a mis-hire early before it becomes expensive.

TL;DR

- Generative AI hiring fails when teams hire for demos instead of production systems.

- Hire only when AI must ship, scale, integrate with real data, or meet compliance needs.

- Look for engineers with software, LLM, RAG, and production experience, not prompt-only skills.

- Assess candidates through real-world tasks, system design, and past project breakdowns.

- Total cost is typically 2–3× salary once infrastructure, data, monitoring, and governance are included.

- Mis-hires reveal themselves through unstable systems, rising costs, and no plan for maintenance.

Why and When to Hire Generative AI Developers

You hire generative AI developers to build AI systems that work reliably in real products and real workflows. A prototype can be built with prompts and an API call. A usable system needs engineering.

Why Hiring Generative AI Developers Becomes Necessary

Generative AI developers handle the problems that show up after the demo:

- Grounding outputs in truth: connecting LLMs to internal knowledge through RAG, databases, and search so the model doesn’t make things up.

- Quality control: reducing hallucinations, improving relevance, and setting evaluation metrics so outputs can be measured.

- Tool integration: enabling AI to call APIs, query systems, trigger workflows, and complete multi-step tasks safely.

- Production engineering: building reliable pipelines with logging, retries, rate-limit handling, caching, and fallbacks.

- Cost and latency management: choosing the right model and architecture, controlling token usage, and optimizing inference so usage doesn’t become financially painful.

- Security and governance: protecting sensitive data, preventing prompt injection, and building auditability for compliance and internal reviews.

In short, you hire them to turn “AI that seems impressive” into “AI that holds up in production.”

When It Makes Sense to Hire Generative AI Developers

The right time to hire is when your AI initiative shifts from “Can we do this?” to “Can we run this safely and consistently?”

That usually happens in these moments:

You’re moving from PoC to production.

Once stakeholders want the system in real workflows, you need someone who can engineer stability, monitoring, and long-term maintenance.

Your AI must use company data.

If the system needs to answer questions from internal docs, policies, tickets, knowledge bases, or databases, you’ll need RAG and data orchestration, not just prompts.

The workflow is multi-step, not single-response.

If the AI must plan steps, call tools, update records, or coordinate actions, you need developers who can build reliable orchestration and guardrails.

Risk increases because of users, scale, or regulation.

More users mean more edge cases. Regulated industries add requirements like access control, logging, and audit trails. That needs dedicated engineering.

When Hiring Can Wait

You can delay hiring if you are still in a lightweight exploration phase, such as:

- Testing off-the-shelf AI tools with no integration

- Running internal experiments that don’t affect customers

- Building a demo with no plan for monitoring, scaling, or maintenance

If the goal is learning, a full hire may be premature. But once a system is expected to run continuously, you’ll feel the need fast.

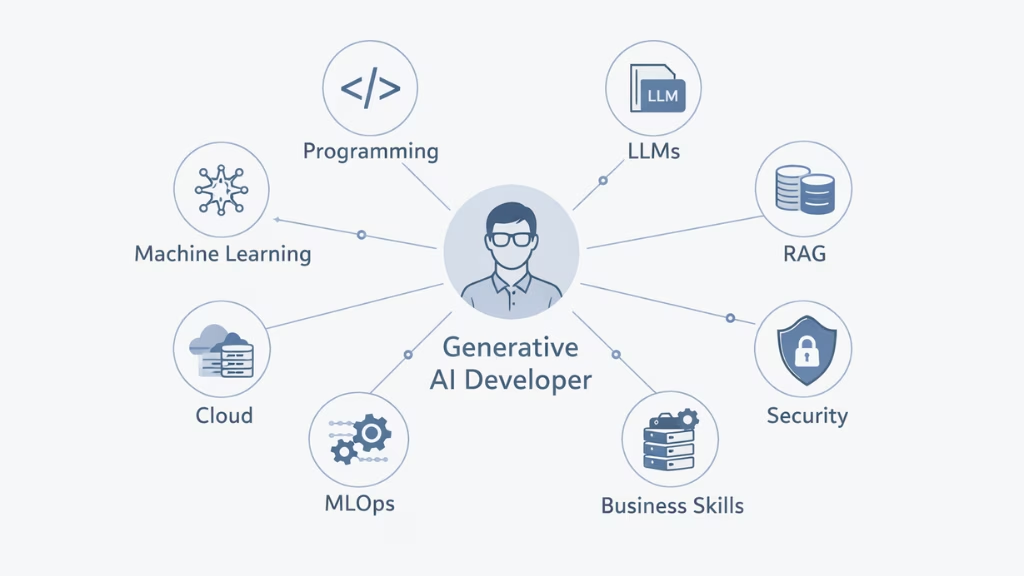

Skills to Look for in a Generative AI Developer

1. Technical Skills

Programming: Python, R.

Machine Learning: Deep learning, neural networks, TensorFlow, PyTorch.

Specific AI: Natural Language Processing (NLP), Computer Vision (image and video).

Large Language Models: Prompt engineering, model evaluation, and fine-tuning basics.

Retrieval Systems: Retrieval-Augmented Generation (RAG), vector databases, and embeddings.

Production Engineering: Model deployment, monitoring, logging, retraining.

A generative AI developer must be comfortable writing production-quality code, not just research scripts. Python is essential for building pipelines, APIs, and integrations, while R is often useful for data analysis and experimentation. The emphasis should be on writing maintainable code that can run reliably in production.

Strong machine learning fundamentals matter because generative AI systems build on them. Developers should understand how deep learning models work, how they fail, and how frameworks like TensorFlow or PyTorch behave in real environments. This knowledge helps them debug issues that cannot be solved by prompt tuning alone.

Most generative AI use cases rely heavily on NLP. Developers should understand text processing, embeddings, semantic search, and language evaluation. In some products, computer vision is also required, for example, analyzing images, video, or multimodal inputs.

Experience with large language models is critical. This includes prompt design, multi-step prompting, managing context windows, and evaluating outputs for consistency and accuracy. A capable developer understands the limits of models and designs around them.

Finally, production engineering separates strong candidates from experimental ones. Developers should know how to deploy models, monitor performance, handle failures, and plan for retraining as data and usage change.

2. Adjacent Skills

Cloud Platforms: AWS, Azure, or GCP.

Infrastructure: GPUs, inference optimization, latency management.

Security: Prompt injection risks, data protection, and access control.

Data Handling: Data quality, versioning, freshness, pipelines.

Generative AI systems are resource-intensive, so developers need a working understanding of cloud infrastructure. They should understand how models are hosted, how usage affects costs, and how to scale services without compromising performance.

Security awareness is essential, especially when AI systems handle sensitive or internal data. Developers should understand common attack vectors and know how to design guardrails that prevent misuse or data leakage.

Data quality plays a major role in output quality. Developers don’t need to be full-time data engineers, but they should understand how stale or poorly structured data directly impacts AI performance.

3. Soft Skills

Business Acumen: Understanding how AI solves real-world problems.

Communication: Explaining technical decisions clearly.

Collaboration: Working with product, legal, and security teams.

Decision-Making: Balancing accuracy, cost, and performance.

A good generative AI developer understands that technology is only valuable when it solves a business problem. They can translate abstract requirements into practical AI solutions and explain trade-offs in simple terms.

Clear communication is especially important in generative AI projects, where outputs are probabilistic and not always predictable. Developers must explain risks, limitations, and design choices to non-technical stakeholders.

Collaboration is also critical. Generative AI systems often touch multiple teams, and successful developers work across functions instead of operating in isolation.

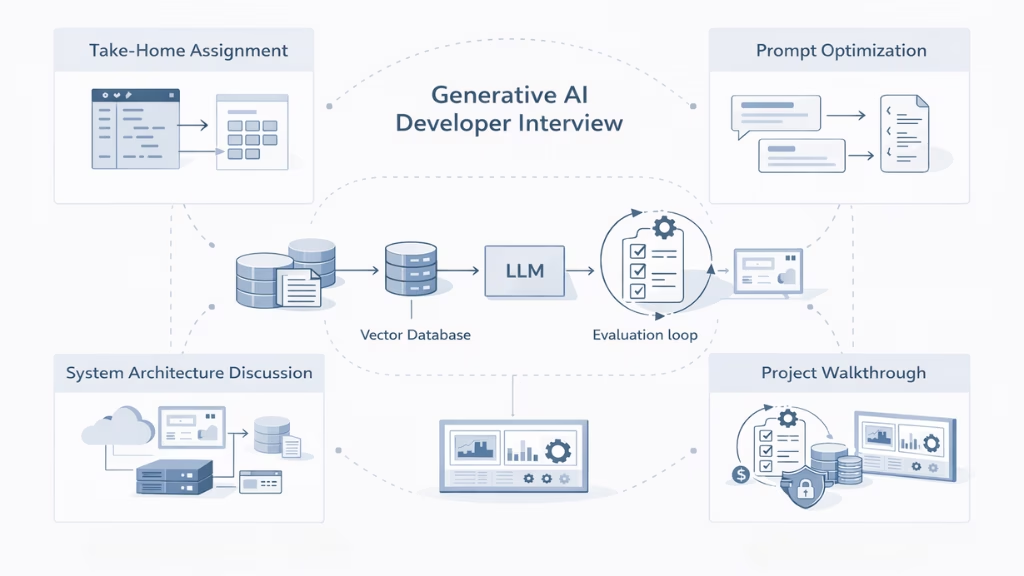

How to Assess Candidates During Interviews

Interviewing generative AI developers requires testing how they think and build in real situations. The examples below show what effective interview exercises actually look like in practice.

1. Take-Home Practical Assignment

Focus: Real-world execution

What to assess: System design, code quality, and trade-off awareness

A good take-home assignment mirrors real work. For example, you might ask the candidate to build a small question-answering system using a set of internal documents. The task could include loading documents, creating embeddings, storing them in a vector database, and querying an LLM to return grounded answers.

Another example is asking them to build a simple AI-powered support assistant that takes a customer query and returns a structured response. The goal is not completeness, but clarity of thinking.

When reviewing the submission, strong candidates explain why they chose a specific model, how they handled errors, and where the system might break at scale. Weak candidates focus only on making it “work once.”

2. Prompt Design and Optimization Exercise

Focus: Understanding LLM behavior

What to assess: Prompt clarity, iteration, and reasoning

One effective exercise is to give the candidate a poorly written prompt, such as a vague summarization instruction, and ask them to improve it. You can then test the prompt on edge cases like long inputs or ambiguous questions.

Another example is asking the candidate to design a prompt that returns structured JSON output for a task like extracting key fields from text. This reveals whether they understand constraints, formatting, and failure handling.

Strong candidates explain why a prompt reduces hallucinations or improves consistency. Weak candidates simply adjust wording without reasoning.

3. End-to-End System Architecture Discussion

Focus: Production readiness

What to assess: Scalability, reliability, and risk awareness

Ask the candidate to design an AI system for a real use case, such as an internal knowledge assistant used by hundreds of employees. They should explain how documents are ingested, how retrieval works, how the LLM is called, and how responses are monitored.

A more advanced example is asking how they would design an AI workflow that updates records in a CRM based on user input. This tests their understanding of tool usage, permissions, and error handling.

Experienced candidates will naturally talk about logging, retries, monitoring, and cost control. Inexperienced ones tend to focus only on the model choice.

4. In-Depth Technical Walkthrough of Past Projects

Focus: Real experience

What to assess: Ownership, depth, and learning

Ask the candidate to walk through a real generative AI system they built. For example, they might describe a RAG-based assistant that initially returned inaccurate answers because of poor chunking or outdated data, and how they fixed it.

Another strong example is when a candidate explains how inference costs spiked after launch and how they optimized prompts, caching, or model selection to control expenses.

If a candidate can discuss failures, trade-offs, and lessons learned in detail, they likely have real production experience. If they only describe demos or ideal outcomes, that’s a warning sign.

Which Generative AI Hiring Model Delivers the Best Return: In-House, Remote, or Hybrid?

There is no single “best” hiring model for generative AI. The right choice depends on how critical AI is to your business, how fast you need to move, and how much internal capability you already have. Each model comes with clear trade-offs.

In-House Generative AI Developers

Hiring in-house works best when generative AI is a core, long-term capability rather than a short-term initiative.

In-house developers have deep context about your product, data, and internal systems. This makes them well-suited for complex integrations, ongoing optimization, and close collaboration with product and engineering teams. Over time, this can lead to stronger ownership and better alignment with business goals.

However, in-house hiring is slow and expensive. Senior generative AI talent is difficult to find, and onboarding can take months. There is also risk: a single hire rarely covers all required areas, such as RAG, MLOps, infrastructure, and security.

Best fit:

Organizations with a clear AI roadmap, long timelines, and the ability to support ongoing AI development internally.

Remote or Contract Generative AI Developers

Remote or contract hiring offers speed and flexibility. It allows teams to access specialized skills quickly without committing to long-term headcount.

This model works well for focused tasks such as building a proof of concept, implementing a specific feature, or validating a technical approach. It is also useful when you need short-term expertise in areas like prompt optimization or RAG setup.

The downside is limited ownership. Contract developers may not fully understand the business context, and knowledge transfer can be weak if the engagement ends too early. Without strong internal oversight, systems can become difficult to maintain.

Best fit:

Short-term projects, early validation, or teams with strong internal leadership who can guide and review the work.

Hybrid Model (Most Common for Generative AI)

The hybrid model combines internal ownership with external execution. Typically, a small in-house team defines requirements, priorities, and architecture, while external or remote developers handle implementation and scaling.

This approach balances speed and control. Internal stakeholders retain strategic direction, while external specialists bring experience and execution power. It also reduces risk by avoiding reliance on a single hire.

Hybrid models are especially effective for generative AI because the work often spans multiple disciplines. No single developer excels at everything, and hybrid teams fill gaps more easily.

Best fit:

Companies are scaling AI beyond pilots, with moderate timelines and a need to manage risk and cost carefully.

Quick Comparison

| Hiring Model | Strengths | Limitations |

|---|---|---|

| In-House | Deep context, long-term ownership | Slow, expensive, narrow skill coverage |

| Remote / Contract | Fast, flexible, specialized | Limited ownership, continuity risk |

| Hybrid | Balanced speed and control | Requires coordination and oversight |

If generative AI is mission-critical and long-term, invest in in-house talent.

If you need speed or niche expertise, use remote developers.

If you want the best balance of execution, cost, and risk, a hybrid model usually delivers the strongest return.

Costs of Hiring Generative AI Developers

Hiring generative AI developers is often underestimated because teams focus on salaries first. In reality, salary is only one component of the total cost. Once infrastructure, data operations, compliance, and long-term maintenance are included, total spend can reach two to three times the initial estimate. This mirrors how enterprises historically underestimated the true cost of cloud and data platform adoption.

1. Pay Ranges by Role and Region

The market for generative AI talent remains tight, and compensation reflects that scarcity.

- United States:

$150K–$250K per year for full-time roles

Contractors typically charge $100–$150 per hour - Europe (UK, Germany, France):

€90K–€160K ($105K–$187K) per year

€80–€120 ($93–$140) per hour for contractors - LATAM & Southeast Asia:

Roughly 20–40% lower than the US and Western Europe for companies hiring remote generative AI developers, though access to senior, production-ready specialists is limited

These figures represent base compensation only. They do not include benefits, recruiting overhead, onboarding time, or the additional roles required to support production AI systems.

2. Key Elements That Determine Overall Cost

Salary alone does not reflect the real cost of hiring generative AI developers. Several structural factors significantly affect total spend.

Compute infrastructure

Running generative AI at scale is expensive. McKinsey estimates global data center capacity must nearly triple by 2030, requiring approximately $5.2 trillion in capital expenditure. Enterprises absorb this through GPU usage, inference endpoints, storage, and networking costs, especially as systems move into production.

Data pipelines

Data preparation typically consumes 15–25% of AI project budgets. This includes cleaning, labeling, augmentation, versioning, and governance. Weak pipelines lead to bias, drift, and rework, increasing costs over time.

Compliance and regulatory support

Hiring developers familiar with frameworks such as the EU AI Act, HIPAA, or India’s DPDP Act commands higher compensation. Enterprises must also fund audit logs, data lineage tracking, risk assessments, and internal reviews, all of which require ongoing engineering effort.

Model licensing and API usage

Foundation model APIs introduce recurring usage costs that grow with scale. High-throughput or regulated systems may require negotiated licensing or on-premise deployment, expenses that are often missing from early budgets.

3. The Hidden Costs

The largest cost surprises appear after deployment, not during hiring.

Retraining pipelines

Generative AI models drift as data, user behavior, and regulations change. Without continuous retraining, accuracy degrades, leading to poor user experience or compliance risk.

Monitoring and observability

Production models must be monitored continuously. Tools such as Arize or WhyLabs introduce ongoing costs that teams frequently underestimate.

Security audits

AI systems expose new attack surfaces. Prompt injection, data leakage, and manipulation attacks are already occurring in real deployments. Security reviews, penetration testing, and red-team exercises must be treated as recurring operational costs.

Explainability and governance

Regulators increasingly require transparency. Implementing explainability frameworks such as SHAP, LIME, or Captum, along with dashboards, audit trails, and legal reviews, adds significant recurring overhead.

Estimated Cost Breakdown for Hiring Generative AI Developers

| Cost Component | Typical Cost Range | What This Covers |

|---|---|---|

| Developer compensation | $80K–$250K / year | Base salary or equivalent contractor cost |

| Recruiting & onboarding | $10K–$30K (one-time) | Hiring fees, onboarding time, and ramp-up loss |

| Compute & infrastructure | $2K–$20K / month | GPUs, inference endpoints, storage, networking |

| Data pipelines & preparation | 15–25% of project budget | Cleaning, labeling, versioning, governance |

| Model/API usage & licensing | $1K–$15K / month | Foundation models, API calls, licenses |

| Monitoring & observability | $500–$5K / month | Drift detection, performance monitoring |

| Security & compliance | $10K–$50K / year | Audits, penetration testing, compliance tooling |

| Retraining & maintenance | Ongoing | Model updates, performance tuning, fixes |

How to Hire the Right Generative AI Developer

Hiring the right generative AI developer is less about finding someone who knows the latest models and more about reducing execution risk. Most hiring failures happen because teams optimize for speed or credentials instead of production readiness.

This process helps avoid that.

Start With the Problem, Not the Role

Before sourcing candidates, be clear about what the AI system needs to do. A developer building a RAG-based knowledge assistant requires different skills than one building autonomous agents or multimodal systems.

Define:

- What data will the system use

- Whether it needs to run in production

- Expected scale, latency, and accuracy

- Security or compliance requirements

This prevents hiring someone who is strong but misaligned with the actual work.

Prioritize Production Experience Over Tools

Many candidates can demonstrate prompt engineering or small demos. Fewer have shipped AI systems that stayed stable over time.

When hiring, prioritize candidates who have:

- Deployed generative AI systems used by real users

- Worked with monitoring, retraining, and cost control

- Dealt with failures, drift, or scaling issues

Tool familiarity changes quickly. Production judgment does not.

Validate Depth Through Real Work

Resumes and portfolios are not reliable indicators in generative AI hiring. Real capability shows up when candidates are asked to:

- Explain past design decisions

- Walk through the trade-offs they made

- Describe what broke and how they fixed it

Candidates who have done real work can explain constraints clearly. Candidates who haven’t usually stay abstract.

Avoid the “One-Person AI Team” Trap

Generative AI systems span multiple domains: backend engineering, data pipelines, MLOps, security, and governance. Expecting one developer to cover everything often leads to fragile systems.

If you’re hiring a single developer, be realistic:

- They should own the core system

- Supporting roles may still be needed

- External help may be required for scale or compliance

Hiring for coverage instead of heroics reduces long-term risk.

Align Incentives and Expectations Early

Many mis-hires happen because success is poorly defined.

Be explicit about:

- What “good performance” means

- How will output quality be measured

- Who owns maintenance and improvement

- How cost and risk trade-offs are handled

Clear expectations help strong candidates succeed and expose weak fits early.

The right generative AI developer is not the one who builds the fastest demo. It’s the one who can build something that still works, stays affordable, and remains trustworthy six months after launch.

Common Signs of an Unsuccessful Hire

Most generative AI mis-hires don’t fail immediately. They fail quietly, through delays, unstable systems, and rising costs. These signals usually appear within the first few months if you know what to watch for.

Strong Demos, Weak Systems

A common warning sign is a developer who can produce impressive demos but struggles to build systems that hold up in real use. If progress stalls once requirements include reliability, data integration, or monitoring, it usually means the candidate lacks production experience.

This often shows up as brittle pipelines, unclear error handling, or frequent rework after deployment.

Over-Reliance on Prompts

Generative AI developers who rely almost entirely on prompt tweaks to fix issues are another red flag. Prompts matter, but they cannot solve problems caused by poor data quality, weak retrieval, or flawed system design.

When accuracy issues are blamed solely on “model limitations,” it often signals shallow system understanding.

Inability to Explain Trade-Offs

Unsuccessful hires struggle to explain why decisions were made. They may describe what they built, but not why it was built that way or what alternatives were considered.

If a developer cannot clearly explain trade-offs between accuracy, latency, cost, and risk, they are unlikely to make good decisions as the system evolves.

No Plan for Maintenance or Drift

Another early sign is the absence of a long-term view. Developers who focus only on initial delivery without discussing monitoring, retraining, or model drift tend to leave systems that degrade quickly.

AI systems are not “set and forget.” Ignoring this reality leads to declining performance and growing technical debt.

Poor Collaboration and Context Awareness

Generative AI work touches product, security, legal, and operations. Developers who work in isolation or dismiss non-technical concerns often slow teams down instead of enabling them.

If collaboration feels difficult early on, it usually gets worse as complexity increases.

Rising Costs Without Clear Justification

Unsuccessful hires often introduce cost growth without being able to explain it. This might include rising API usage, unnecessary model complexity, or inefficient infrastructure choices.

Cost awareness is a core skill. When spending increases without a clear explanation or mitigation plan, it’s a serious warning sign.

Conclusion

Hiring generative AI developers isn’t about keeping up with trends; it’s about making sure your AI systems actually work once they’re in the real world. The difference between success and frustration usually comes down to hiring for production experience, not demos.

When you’re clear about why you’re hiring, realistic about costs, and deliberate in how you assess candidates, generative AI becomes a durable capability instead of an ongoing experiment. When you’re not, the warning signs show up quickly in unstable systems, rising spend, and constant rework.

Treat generative AI hiring as a long-term engineering decision, not a quick add-on. The right hire won’t just help you build faster, they’ll help you build something that lasts.

FAQs: How to Hire Generative AI Developers

When does it make sense to hire a generative AI developer instead of using tools like ChatGPT?

You should hire a generative AI developer when AI must integrate with your own data, run in production, or meet security and compliance requirements. Off-the-shelf tools work for experimentation, but production systems require custom engineering, monitoring, and cost control.

What skills should a generative AI developer have?

A generative AI developer should have strong software engineering skills, hands-on experience with large language models, Retrieval-Augmented Generation (RAG), and production deployment. Prompt engineering alone is not enough; experience with monitoring, scaling, and maintaining AI systems is critical.

Is prompt engineering enough to qualify as a generative AI developer?

No. Prompt engineering is only one part of the role. Production-ready generative AI developers must design systems, integrate data, manage failures, control costs, and maintain models over time. Prompt-only experience usually indicates demo-level knowledge, not production capability.

How do you assess whether a generative AI developer can work in production?

The best way is through real-world assessments. Use take-home assignments, system architecture discussions, and detailed walkthroughs of past projects. Strong candidates can explain trade-offs, failures, and long-term maintenance, not just show working demos.

What interview questions should I ask a generative AI developer?

Ask questions that reveal system thinking, such as how they handle hallucinations, manage retrieval failures, control inference costs, and monitor models in production. Questions about past failures and lessons learned are more revealing than tool-specific questions.

How much does it cost to hire a generative AI developer?

The total cost typically goes beyond salary. While compensation varies, the full cost of hiring generative AI developers often reaches 2–3× salary once infrastructure, data pipelines, monitoring, retraining, security, and governance are included.

What hidden costs should I expect after hiring generative AI developers?

Hidden costs include retraining pipelines, monitoring and observability tools, security audits, and explainability requirements. These costs usually appear after deployment and have the biggest impact on long-term ROI if not planned for early.

What are the common mistakes companies make when hiring generative AI developers?

Common mistakes include hiring for demos instead of production experience, relying too heavily on prompt skills, underestimating infrastructure and maintenance costs, and expecting one developer to cover engineering, MLOps, security, and compliance alone.

How do I know if I’ve made a generative AI mis-hire?

Mis-hires usually show up through unstable systems, rising costs without explanation, poor output quality over time, and no clear plan for monitoring or retraining. These issues often appear within the first few months of production use.

This page was last edited on 5 January 2026, at 10:10 am

Contact Us Now

Contact Us Now

Start a conversation with our team to solve complex challenges and move forward with confidence.