- What Is Generative AI, Really?

- Types of Generative AI

- How Does Generative AI Work?

- Popular AI Generators You Should Know

- A Brief History of Generative AI

- Examples of Generative AI in Action

- What Are the Applications of Generative AI Today?

- Benefits of Generative AI

- What Are the Risks and Ethical Concerns of Generative AI?

- Where Is Generative AI Going?

Generative AI is a type of artificial intelligence that creates new content like text, images, code, or audio based on patterns it has learned from existing data. It’s rapidly gaining attention for transforming how people work, communicate, and build creative and technical projects.

As Sam Altman, CEO of OpenAI, says: “AI will continue to get way more capable … People are using it to create amazing things. If we could see what each of us can do 10 or 20 years in the future, it would astonish us today.”

The buzz began when tools like ChatGPT and DALL·E showed that AI could write essays, generate images from text prompts, and even compose music or write software. What once felt like science fiction is now embedded directly into everyday apps, enterprise systems, and decision-making workflows. Businesses are investing billions in this technology, and users are finding both productivity gains and new ethical questions to navigate.

But despite the headlines, most people still ask: “What exactly is generative AI, how does it work, and what does it mean for me?”

What Is Generative AI, Really?

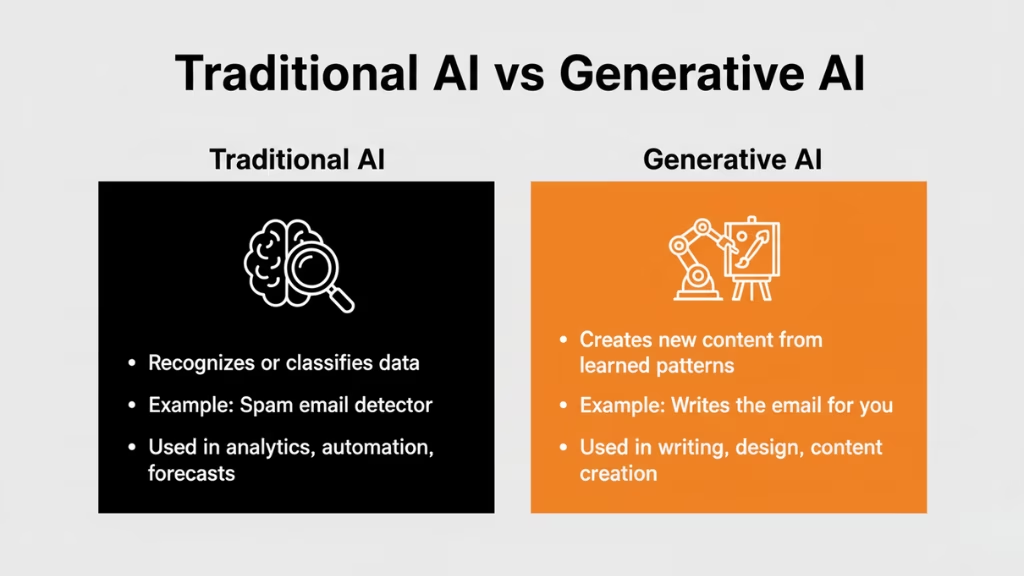

Generative AI is a branch of artificial intelligence designed to produce original outputs such as text, images, audio, code, or video by learning the underlying structure and patterns of large datasets. Unlike traditional AI, which focuses on classification or prediction, generative AI creates new outputs that increasingly resemble human reasoning, creativity, and problem-solving.

At its core, generative AI answers a different question than most machine learning models. Instead of asking, “What is this?” (classification) or “What happens next?” (prediction), it asks, “What could be?” The model learns the structure of the data (language rules, visual patterns, or coding logic) and generates something new that statistically fits.

How Generative AI Differs from Traditional AI

Traditional AI excels at recognizing patterns and making decisions based on historical data. It’s often used for tasks like detecting spam emails, forecasting trends, or automating simple processes.

In these cases, the AI evaluates what already exists. Modern systems, however, increasingly combine predictive and generative capabilities so they can both analyze and create.

Generative AI works differently. Instead of just analyzing data, it creates something entirely new from what it has learned. For example, while a traditional AI might filter out spam, a generative AI can actually write the email for you. It’s used in writing, designing, coding, and other creative tasks where the goal is to produce original content, not just interpret existing information.

Learning Distributions

Generative models work by estimating the probability distribution of the training data. This allows them to generate content that looks, sounds, or behaves like the original data but is entirely new.

For example:

- A generative text model like GPT-4 might complete a sentence or write an article from scratch.

- An image model like DALL·E or Stable Diffusion can turn a sentence like “a futuristic city at sunset” into a unique image.
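As a minimal sketch of "estimate the distribution, then sample from it", the snippet below fits a one-dimensional Gaussian to a handful of numbers and draws new values that resemble the data without copying it. The data values and the function names `fit_gaussian` and `generate` are illustrative assumptions; real generative models learn far richer distributions over text or pixels.

```python
import random
import statistics


def fit_gaussian(samples):
    """Estimate the mean and standard deviation of 1-D training data."""
    return statistics.mean(samples), statistics.stdev(samples)


def generate(mean, std, n, seed=0):
    """Draw n new values from the fitted Gaussian."""
    rng = random.Random(seed)
    return [rng.gauss(mean, std) for _ in range(n)]


# Hypothetical training data clustered around 5.0
training_data = [4.8, 5.1, 5.0, 4.9, 5.3, 5.2, 4.7, 5.0]
mean, std = fit_gaussian(training_data)
new_samples = generate(mean, std, n=5)
print(f"learned mean={mean:.2f}, std={std:.2f}")
print("new samples:", [round(x, 2) for x in new_samples])
```

The generated values are not taken from `training_data`; they are fresh draws that follow the same learned pattern.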

Where You’ve Already Seen It

You’ve likely interacted with generative AI already:

- Conversational systems that understand context, intent, and multi-step queries

- Generative systems that create visual, written, or multimedia assets on demand

- AI copilots that assist with writing, reviewing, and reasoning about code

The shift is profound: AI is moving from reactive tools to context-aware collaborators capable of supporting real-world goals.

Types of Generative AI

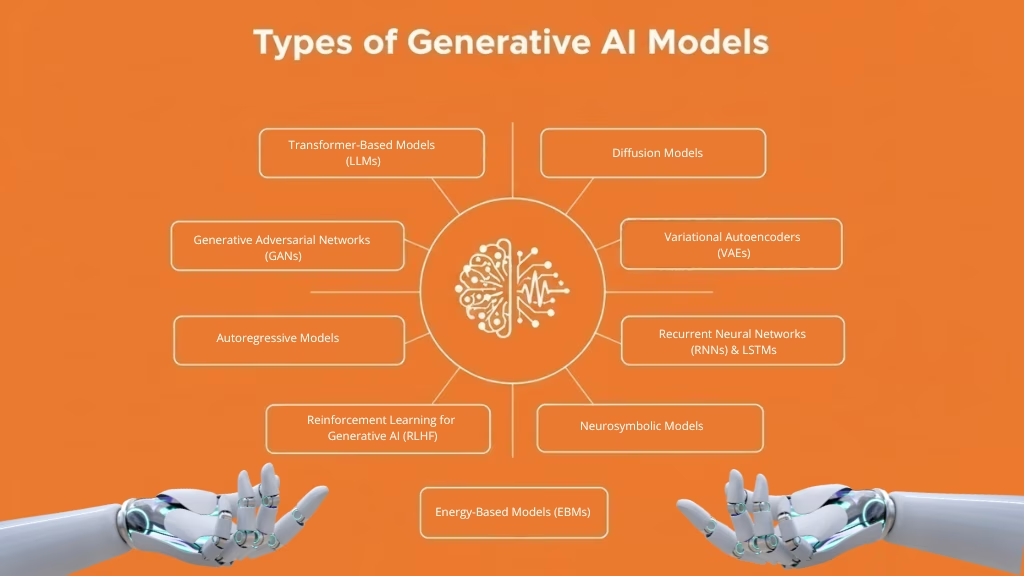

Generative AI is powered by diverse model architectures, each with distinct learning strategies, capabilities, and use cases. From image synthesis to code generation, these models underpin today’s most advanced applications across language, vision, audio, and structured data.

- Transformer-Based Models (LLMs)

- Generative Adversarial Networks (GANs)

- Diffusion Models

- Variational Autoencoders (VAEs)

- Autoregressive Models

- Recurrent Neural Networks (RNNs) & LSTMs

- Reinforcement Learning for Generative AI (RLHF)

Recent advances in generative AI aren’t driven by data alone. They’re the result of major breakthroughs in how AI models are designed. Techniques like generative adversarial networks made AI-generated images more realistic, diffusion models improved control and output quality, and transformer architectures enabled models to understand context at scale. Together, these innovations moved generative AI from experimental research into practical, production-ready systems used across industries.

1. Transformer-Based Models (LLMs)

Use Case: Language generation, code, vision-language models

Key Examples: GPT-4, Claude, Gemini, BERT (understanding), DALL·E (vision)

Transformers are the foundation of modern generative AI, particularly in natural language processing. Introduced by Vaswani et al. in 2017, they use a self-attention mechanism to evaluate relationships between all parts of an input sequence in parallel, allowing for deep context awareness and scalability.

- Architecture: Encoder-decoder or decoder-only (e.g., GPT); uses multi-head self-attention layers

- Strengths: Highly parallelizable, excellent scalability with data/compute, cross-modal adaptability

- Applications: Chatbots, text generation, translation, summarization, code generation, text-to-image
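The self-attention idea can be sketched in a few lines of plain Python. This is a simplified illustration: real transformers first project each token through learned query, key, and value matrices and use many attention heads, whereas here the raw token vectors are used directly as queries, keys, and values. The function names and the toy vectors are assumptions made for the example.

```python
import math


def softmax(xs):
    """Turn raw scores into weights that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this token against every token in the sequence
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is a weighted mix of all value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy "token" vectors (hypothetical embeddings)
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights shows how strongly one token attends to every token in the sequence, which is what gives the model its sense of context.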

2. Generative Adversarial Networks (GANs)

Use Case: Image and video synthesis, style transfer, synthetic data

Key Examples: StyleGAN, BigGAN, ThisPersonDoesNotExist.com

GANs operate via a two-network game between a generator (that produces synthetic samples) and a discriminator (that judges authenticity). The competition drives both networks to improve, resulting in highly realistic outputs.

- Architecture: Generator + Discriminator trained adversarially

- Strengths: Sharp, photorealistic image outputs; excellent for creativity

- Applications: AI art, synthetic faces, upscaling, deepfakes, super-resolution
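To make the two-network game concrete, here is a small sketch of the losses each side tries to minimize, given the discriminator's probability that a sample is real. It uses the common "non-saturating" generator loss; the function names and example probabilities are assumptions, and no actual networks are trained here.

```python
import math


def discriminator_loss(p_real, p_fake):
    """Low when real samples score near 1 and fake samples score near 0."""
    return -(math.log(p_real) + math.log(1.0 - p_fake))


def generator_loss(p_fake):
    """Low when the discriminator is fooled into scoring fakes near 1."""
    return -math.log(p_fake)


# A confident discriminator versus one that is guessing
print(discriminator_loss(p_real=0.9, p_fake=0.1))
print(discriminator_loss(p_real=0.5, p_fake=0.5))

# A generator that fools the discriminator versus one that does not
print(generator_loss(p_fake=0.9))
print(generator_loss(p_fake=0.1))
```

The two losses pull in opposite directions on `p_fake`, which is the competition that pushes both networks to improve.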

3. Diffusion Models

Use Case: Text-to-image generation, photo editing, audio synthesis

Key Examples: Stable Diffusion, DALL·E 2, Google Imagen, Sora

Inspired by thermodynamic diffusion, these models add noise to data over multiple steps and then learn to reverse that noise process to generate clean outputs. They now outperform GANs in image fidelity and diversity.

- Architecture: Forward (noising) and reverse (denoising) stochastic processes

- Strengths: Stable training, high-quality samples, robust to mode collapse

- Applications: Image generation from text, inpainting, video creation, and molecule generation
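The forward (noising) half of this process is simple enough to sketch directly. The example below follows the widely used formulation in which a noisy sample at step t is a blend of the original data and Gaussian noise; the linear schedule values, function names, and the three-number "image" are illustrative assumptions. The learned reverse (denoising) network is not shown.

```python
import math
import random


def linear_beta_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Noise added per step, increasing linearly."""
    if steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (steps - 1)
    return [beta_start + i * step for i in range(steps)]


def cumulative_alphas(betas):
    """Fraction of the original signal that survives up to each step."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def add_noise(x0, t, abar, rng):
    """Jump straight to step t: sqrt(abar)*x0 + sqrt(1-abar)*noise."""
    keep = math.sqrt(abar[t])
    noise = math.sqrt(1.0 - abar[t])
    return [keep * x + noise * rng.gauss(0.0, 1.0) for x in x0]


betas = linear_beta_schedule(1000)
abar = cumulative_alphas(betas)
rng = random.Random(0)
x0 = [1.0, -1.0, 0.5]  # stand-in for pixel values
print("early step:", add_noise(x0, 10, abar, rng))
print("final step:", add_noise(x0, 999, abar, rng))
```

Early steps stay close to the original data, while the final step is almost pure noise. Generation runs this in reverse, starting from noise.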

4. Variational Autoencoders (VAEs)

Use Case: Data variation, anomaly detection, compressed latent modeling

Key Examples: β-VAE, VQ-VAE, NVAE

VAEs encode input data into a probabilistic latent space and decode points from that space, either to reconstruct the input or to generate new samples. This structure enables smooth interpolation and controlled generation, though outputs are typically less sharp than those of GANs.

- Architecture: Encoder → Latent Distribution → Decoder

- Strengths: Interpretability, smooth latent space, probabilistic sampling

- Applications: Anomaly detection, generative design, style variation, unsupervised learning
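Two small pieces sit at the heart of the Encoder → Latent Distribution → Decoder flow: sampling a latent point from the encoder's predicted distribution, and a penalty that keeps that distribution close to a standard normal. The sketch below shows both, assuming the encoder has already produced a mean and log-variance per latent dimension; the function names and values are hypothetical, and the encoder and decoder networks themselves are omitted.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL divergence between N(mu, sigma^2) and N(0, 1), summed over dims."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mu, log_var))


rng = random.Random(0)
mu, log_var = [0.3, -1.2], [-0.5, 0.1]  # hypothetical encoder outputs
z = reparameterize(mu, log_var, rng)
print("latent sample:", [round(v, 3) for v in z])
print("KL penalty:", round(kl_to_standard_normal(mu, log_var), 4))
```

Because nearby latent points decode to similar outputs, sampling or interpolating in this space is what gives VAEs their smooth, controllable generation.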

5. Autoregressive Models

Use Case: Text, time series, image, and audio sequence modeling

Key Examples: GPT-2/3/4 (text), PixelRNN/CNN (images), WaveNet (audio)

These models predict the next token or element one step at a time, conditioned on previous outputs. They are powerful for sequence generation and modeling complex dependencies, but slower in inference due to stepwise decoding.

- Architecture: Sequential generation via next-token prediction

- Strengths: High fidelity, strong local coherence

- Applications: Story and code writing, stock price forecasting, pixel-level image creation

6. Recurrent Neural Networks (RNNs) & LSTMs

Use Case: Sequential data, speech synthesis, music generation

Key Examples: LSTM, GRU, DeepSpeech, early chatbot architectures

RNNs process input data step-by-step while maintaining memory of prior inputs. LSTMs and GRUs improve upon standard RNNs by addressing the vanishing gradient problem, enabling learning over long sequences.

- Architecture: Cyclic hidden state with gates (LSTM)

- Strengths: Effective for temporal data and sequence dependency

- Applications: Speech recognition, real-time translation, predictive typing, melody generation
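The "memory of prior inputs" can be seen in a one-neuron sketch. This is a plain RNN cell with scalar weights chosen by hand for illustration; real networks use weight matrices learned in training, and the LSTM and GRU gates are left out.

```python
import math


def rnn_step(x, h, w_x, w_h, b):
    """New hidden state from the current input and the previous state."""
    return math.tanh(w_x * x + w_h * h + b)


def run_rnn(inputs, w_x=0.5, w_h=0.8, b=0.0):
    """Process a sequence one step at a time, carrying the state forward."""
    h = 0.0
    states = []
    for x in inputs:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# One non-zero input followed by silence
states = run_rnn([1.0, 0.0, 0.0])
print([round(s, 3) for s in states])
```

The state stays non-zero after the input goes quiet, but it fades with each step. That fading is a small-scale picture of why plain RNNs struggle with long sequences and why gated variants were introduced.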

7. Reinforcement Learning for Generative AI (RLHF)

Use Case: Alignment, dialog optimization, creative generation

Key Examples: ChatGPT (fine-tuned via RLHF), reward-model-tuned assistants

Reinforcement Learning is used to align generative models with human goals. In RLHF (Reinforcement Learning from Human Feedback), a reward model is trained from human preferences and guides further fine-tuning of the base model.

- Architecture: Policy model + reward model + optimizer

- Strengths: Human-aligned outputs, better safety, and relevance

- Applications: Safer chatbots, controllable generation, dialog systems, game level generation
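One common way to train the reward model is with a pairwise preference loss: given two responses where a human preferred one, the model is penalized unless it scores the preferred response higher. The sketch below shows that loss on hand-picked scores. The function name and numbers are assumptions, and the later policy fine-tuning step is not shown.

```python
import math


def preference_loss(reward_chosen, reward_rejected):
    """-log(sigmoid(r_chosen - r_rejected)): small when the preferred
    response gets the higher reward."""
    margin = reward_chosen - reward_rejected
    return -math.log(1.0 / (1.0 + math.exp(-margin)))


print(preference_loss(2.0, 0.0))  # agrees with the human preference
print(preference_loss(0.0, 0.0))  # undecided
print(preference_loss(0.0, 2.0))  # disagrees with the human preference
```

Minimizing this loss over many human comparisons teaches the reward model to rank responses the way people do.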

8. Other Emerging Architectures

Normalizing Flows: Learn invertible mappings between data and latent spaces; ideal for exact likelihood estimation

Energy-Based Models (EBMs): Score data using an energy function; still experimental but conceptually powerful

Neurosymbolic Models: Combine deep learning with symbolic logic; promising for explainability and structured reasoning

How Does Generative AI Work?

There are two answers to how generative AI works.

From an engineering standpoint, we know exactly how these systems are built. Developers design the neural network architecture, decide how information flows through it, and engineer the training process that updates the model over time. We can inspect the structure, count the connections, and track how training changes the model’s parameters.

But from a practical standpoint, we still can’t fully explain how a trained model produces specific creative or “smart” outputs. What happens inside the network’s layers during generation is so complex that it’s difficult to interpret in human terms. The results are measurable and repeatable, but the internal process is often more like a black box than a transparent chain of reasoning. In other words, we know the inputs, we know the outputs, and we know the training rules, yet there’s still a “we don’t fully know” gap in the middle.

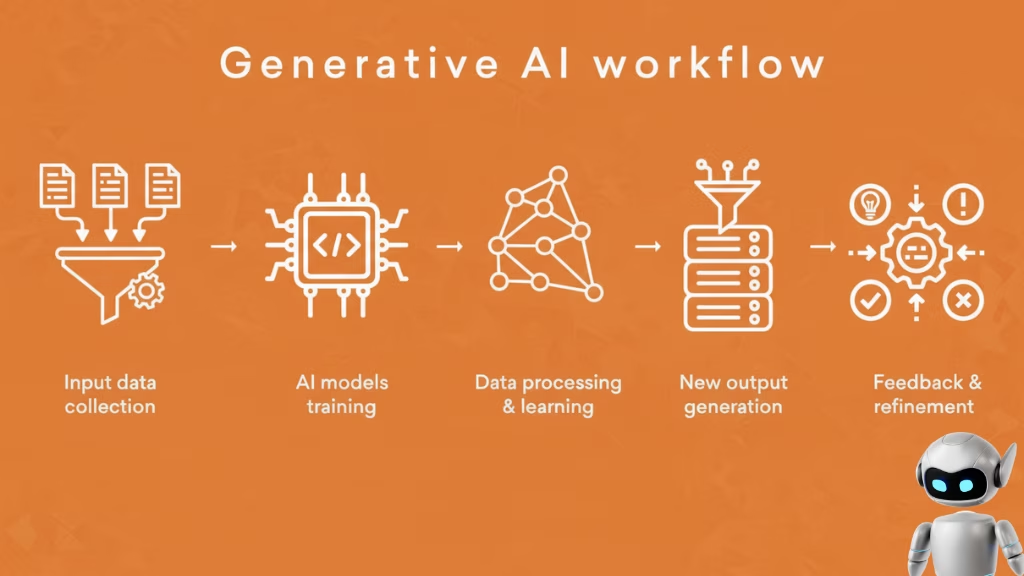

At a high level, generative AI follows five steps:

1. Input Data Collection

Generative AI starts with huge volumes of data: text, images, audio, code, and more. Rather than learning explicit rules, the model learns by exposure: it sees example after example and begins to detect what tends to come next, what tends to belong together, and what patterns repeat across contexts.

A helpful way to think about this is prediction: just as humans learn by constantly anticipating what happens next and adjusting based on reality, generative AI is trained to predict and improve through feedback.

2. AI Model Training

All generative AI models begin as artificial neural networks encoded in software. A simple visual metaphor is a spreadsheet, but stacked into many layers, like a 3D spreadsheet. Each “cell” (neuron) performs a small calculation, and the network passes signals forward layer by layer.

What matters most isn’t the number of neurons, it’s the number of connections between them. Those connections have strengths called weights (also known as parameters). When you hear that a model has billions of parameters, it’s referring to these connection strengths, essentially the adjustable settings the model learns during training.
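To see why connections dominate, here is a quick count for a small fully connected network. The layer sizes are a hypothetical example, and the count includes one bias per neuron alongside the connection weights, since biases are also learned parameters.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection
        total += n_out         # one bias per neuron
    return total


sizes = [784, 128, 64, 10]  # input layer, two hidden layers, output layer
neurons = sum(sizes[1:])
print("neurons:", neurons)                     # 202
print("parameters:", count_parameters(sizes))  # 109386
```

A couple of hundred neurons already produce more than a hundred thousand parameters, which is why large models reach billions.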

3. Data Processing and Learning

During training, the model is given a task that is simple in concept but massive in scale: make a prediction.

For a language model, that usually means predicting the next token in a sequence. Importantly, models don’t “see” text as words the way humans do; they see tokens, which can be:

- a full common word,

- part of a word,

- punctuation,

- or even a space plus a few characters that frequently occur together.
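A toy tokenizer makes this concrete. The sketch below greedily matches the longest piece it can find in a tiny, made-up vocabulary and falls back to single characters. Production tokenizers typically learn their vocabulary from data (for example with byte-pair encoding), so treat the vocabulary and the matching rule here as illustrative assumptions.

```python
def tokenize(text, vocab):
    """Greedy longest-match tokenization with single-character fallback."""
    tokens = []
    max_len = max(len(piece) for piece in vocab)
    i = 0
    while i < len(text):
        for length in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + length]
            if piece in vocab or length == 1:
                tokens.append(piece)
                i += length
                break
    return tokens


# Hypothetical vocabulary: whole words, word parts, punctuation,
# and pieces that begin with a space
vocab = {"the", " cat", " is", " un", "believ", "able", "!"}
text = "the cat is unbelievable!"
tokens = tokenize(text, vocab)
print(tokens)
# ['the', ' cat', ' is', ' un', 'believ', 'able', '!']
```

Note how "unbelievable" is split into three pieces and how the leading space travels with the token that follows it.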

To make a prediction, tokens are processed through the network’s layers. Early on, the model performs poorly. But after each attempt, it compares its prediction to the training data and adjusts its parameters using backpropagation. The goal isn’t just to get the right answer once, it’s to make the right answer slightly more likely next time.

That small improvement, repeated across trillions of tokens, is what turns a “newborn” model into a capable generator.
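The "slightly more likely next time" idea can be shown with a stripped-down example. Here the model is just three adjustable scores (one per candidate token) rather than a full network, so the gradient can be written directly instead of being backpropagated through layers. The learning rate, function names, and three-token vocabulary are assumptions for illustration.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_step(logits, target, lr=0.5):
    """One gradient-descent step on cross-entropy loss.

    For softmax + cross-entropy, the gradient with respect to each score
    is (predicted probability - 1) for the correct token and
    (predicted probability) for every other token.
    """
    probs = softmax(logits)
    grads = [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]
    return [x - lr * g for x, g in zip(logits, grads)]


logits = [0.0, 0.0, 0.0]  # untrained: every token equally likely
target = 2                # index of the token that actually came next
before = softmax(logits)[target]
for _ in range(10):
    logits = train_step(logits, target)
after = softmax(logits)[target]
print(f"probability of correct token: {before:.3f} -> {after:.3f}")
```

Each step nudges the probability of the correct token upward, which is the same pressure a full model feels across its billions of parameters.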

And here’s the key point: we understand this training loop well, but the specific internal representations the model forms across layers are difficult to decode. There’s a lot we can describe mechanically, and a lot we can measure, but what the layers “did” to produce a particular response is still not fully interpretable.

4. New Output Generation

Once trained, the model generates content using the same prediction engine now applied creatively.

In text generation, it takes your prompt as a sequence of tokens, processes them through the layers, and produces a first output token. That token becomes part of the input for producing the next token, and the next, and so on until a full response emerges. This is why the output can feel coherent: each step is conditioned on what came before.
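The token-by-token loop can be sketched with a very small stand-in model. Below, a bigram model (which only looks at the single previous word) plays the role of the neural network. A real language model conditions on the whole preceding sequence, so the corpus, function names, and the model itself are simplifying assumptions. The loop structure is the part that carries over.

```python
import random
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, prompt, max_new_tokens, rng):
    """Append one sampled token at a time, feeding each output back in."""
    output = list(prompt)
    for _ in range(max_new_tokens):
        options = counts.get(output[-1])
        if not options:  # nothing ever followed this token in training
            break
        words = list(options.keys())
        weights = list(options.values())
        output.append(rng.choices(words, weights=weights, k=1)[0])
    return output


corpus = "the cat sat on the mat and the cat slept".split()
model = train_bigram(corpus)
result = generate(model, ["the"], max_new_tokens=5, rng=random.Random(0))
print(" ".join(result))
```

Every new word is chosen based on what has been generated so far and then becomes part of the input for the next choice.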

In real-world applications, this step is often strengthened with retrieval-augmented generation (RAG). Instead of relying only on what the model learned during training, the system retrieves relevant information from trusted, private, or up-to-date sources before generating a response. This helps ground outputs in a real context and reduces inaccuracies.
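A minimal sketch of the retrieve-then-generate pattern looks like this. Retrieval here is a simple word-overlap score over three made-up documents; production systems usually rely on vector embeddings and a search index, and the final prompt would be sent to a language model, which is not shown. All names and documents are hypothetical.

```python
import re


def words(text):
    """Lowercase a string and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=1):
    """Return the k documents sharing the most words with the query."""
    ranked = sorted(documents,
                    key=lambda doc: len(words(query) & words(doc)),
                    reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Place the retrieved text ahead of the question."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Refunds are available within 30 days of purchase.",
    "Our office is open Monday to Friday.",
    "Shipping takes five business days.",
]
question = "How many days do I have to request refunds?"
prompt = build_prompt(question, docs)
print(prompt)
```

Because the model is handed the relevant policy text along with the question, its answer can be grounded in that source rather than in whatever it memorized during training.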

5. Feedback and Refinement

After initial training, models can be refined to better match human expectations. A common approach is reinforcement learning from human feedback (RLHF), where people review outputs and provide feedback (for example, helpful vs unhelpful). That signal is used to further tune the model so it produces responses that are more useful, safer, and more aligned with the desired tone and behavior.

Even with refinement, the central reality remains: we can explain the system’s design and training, and we can evaluate its outputs, but the exact internal path from prompt to response is still complex enough that we can’t fully “read” what happened inside the layers.

While engineers fully understand how these models are built and trained, the internal process that leads to a specific response is often too complex to interpret step by step.

Popular AI Generators You Should Know

Generative AI platforms make it easier for individuals and teams to automate content creation, design, coding, and communication tasks. Below are some of the most widely used tools, each specializing in different types of creative output.

ChatGPT (OpenAI)

Best for: Writing, research, and ideation

Built on the GPT (Generative Pre-Trained Transformer) architecture, ChatGPT generates human-like text responses for research, strategy, writing, and communication. It can draft emails, reports, lesson plans, and even technical documentation, making it a go-to assistant for professionals and students alike.

DALL·E 3 (OpenAI)

Best for: Image creation and concept visualization

DALL·E 3 turns written prompts into vivid, detailed images. Designers, marketers, and educators use it to create illustrations, product mockups, and visual storytelling assets that complement written content.

Microsoft Copilot

Best for: Productivity and workflow automation

Integrated across Microsoft 365 (Word, Excel, PowerPoint, Outlook), Copilot uses OpenAI’s models to assist with document drafting, spreadsheet analysis, and presentation design. It’s also available as a stand-alone product, blending generative text with real-time business data.

Google Gemini (formerly Bard)

Best for: Research and creative collaboration

Gemini is Google’s multimodal AI model integrated across its ecosystem, including Docs, Sheets, and Gmail. It combines text generation with image understanding, helping users brainstorm, summarize, and organize information interactively.

Each of these platforms demonstrates how generative AI can streamline daily workflows, from content and design to productivity and strategy, all with a few natural-language prompts.

A Brief History of Generative AI

Generative AI has evolved from simple rule-based systems to powerful models capable of producing human-like text, images, and audio. Its development has followed major breakthroughs in deep learning, model architectures, and computing power.

Here’s a high-level timeline of key milestones:

1960s–1980s: Early Generative Attempts

ELIZA (1966): A rule-based chatbot that mimicked conversation using scripts. It was not truly generative, but it was a precursor to AI dialogue.

Markov Chains: Used in simple text generation (e.g., random sentence assembly based on word probabilities).

2014: The Rise of Deep Generative Models

Generative Adversarial Networks (GANs) were introduced by Ian Goodfellow.

- Revolutionized image synthesis by allowing machines to generate highly realistic visuals.

- Set the stage for creative AI applications in art, gaming, and synthetic media.

2017: Transformers Change the Game

- “Attention Is All You Need” (Vaswani et al.): Introduced the transformer architecture.

- Enabled better understanding of context in sequences, the foundation of modern language models.

2018–2020: Scaling Language Models

- OpenAI GPT-1, GPT-2, GPT-3: Marked the start of massive pretrained models trained on internet-scale text.

- BERT (Google): Focused on understanding language rather than generating it; used for search and NLP tasks.

2021–2022: Multimodal and Diffusion Models Emerge

- DALL·E & CLIP (OpenAI): Combined vision and language to generate images from text prompts.

- Diffusion Models (e.g., Stable Diffusion, Imagen): Outperformed GANs in image quality and control.

- Whisper (OpenAI): A generative model for speech recognition and translation.

2023–2025: The Generative AI Explosion

- ChatGPT goes mainstream (launched in November 2022): Millions adopt generative tools in work and education.

- GPT-4, Claude, Gemini, LLaMA 2/3: More powerful, multimodal models capable of text, image, code, and voice generation.

- Enterprise & open-source adoption: AI assistants, copilots, and sandbox models enter every major platform.

Each wave of innovation, from GANs to Transformers to Diffusion, expanded what machines can create. Today’s generative AI is the result of over a decade of breakthroughs layered on one another.

Examples of Generative AI in Action

Generative AI has become a practical co-creator across creative, academic, and business contexts. These examples illustrate how users apply GenAI tools to boost productivity and creativity in real-world scenarios.

Content Writing and Editing

- Generate blog posts, ad copy, or essays in specific tones and lengths

- Rewrite or summarize text for clarity and conciseness

- Create outlines for research papers, resumes, and marketing briefs

Example: Using ChatGPT to produce a first draft of a report or social media post, which you then refine manually.

Multimedia and Localization

- Add subtitles or dubbed audio to educational videos, films, and tutorials

- Generate voiceovers or translations for international audiences

- Enhance learning accessibility through automated captioning

Example: An e-learning company using AI dubbing tools to translate training materials into multiple languages.

Programming and Technical Tasks

- Generate simple code snippets or starter frameworks

- Improve existing code through optimization and debugging suggestions

- Automate repetitive tasks such as documentation or test case generation

Example: Developers using GitHub Copilot to complete code faster and reduce manual workload.

Music, Design, and Creative Production

- Compose melodies or instrumentals in specific genres or moods

- Generate album art, logos, or promotional graphics from prompts

- Combine text, audio, and visuals for integrated media projects

Example: Artists using DALL·E or Midjourney to design visuals inspired by AI-composed tracks.

Summarization and Knowledge Work

- Condense long articles, emails, or meeting transcripts

- Generate executive summaries or key-point digests

- Draft survey forms, presentation outlines, or project briefs

Example: Teams using AI to summarize internal documents, surface insights, and support faster operational decisions.

Generative AI Examples in Real-World Enterprises

Generative AI has moved beyond experimentation. Organizations across industries are already deploying it to improve productivity, decision-making, and customer engagement.

A few representative examples include:

- Snap Inc. (Snapchat) uses an AI assistant, My AI, to enable conversational experiences, feature discovery, and personalized recommendations within the app.

- Bloomberg developed BloombergGPT, a domain-specific language model trained on proprietary financial data to support content generation and internal query workflows.

- Duolingo uses AI-powered tutoring to deliver personalized feedback and explanations to learners.

- Slack leverages generative AI to summarize conversations and extract insights from internal knowledge.

- Riseup Labs helps enterprises design, integrate, and operationalize generative AI solutions—embedding AI into existing systems, automating workflows, and ensuring outputs are grounded, secure, and aligned with real business objectives.

Together, these examples show how generative AI is being embedded into real products, workflows, and decision systems, signaling its transition into an enterprise-ready technology.

What Are the Applications of Generative AI Today?

Generative AI is being used across industries to automate tasks, enhance creativity, and improve productivity. From writing marketing copy to designing new products, it enables machines to create content that once required human imagination and labor.

“AI will bring a lot of benefits to the world … but we must ensure it benefits everyone.” – Yann LeCun

Below are key application areas, with real-world examples:

1. Content & Communication

Generative AI tools can draft, rewrite, or personalize language in various formats.

- Use Cases:

  - Email writing

  - Blog posts and SEO content

  - Social media captions

  - Chatbots and customer support

- Example Tools: Jasper, ChatGPT, Copy.ai

2. Visual Creation & Design

AI can produce high-quality images, designs, and illustrations from text prompts or sketches.

- Use Cases:

  - Product mockups

  - Ad creatives and graphics

  - Book covers and concept art

  - Game and film visual design

- Example Tools: Midjourney, DALL·E, Canva AI

3. Audio, Music & Voice

Generative models can compose music or synthesize human-like speech.

- Use Cases:

  - Podcast intros

  - Voice assistants and dubbing

  - AI-generated jingles or beats

  - Language learning tools

- Example Tools: ElevenLabs, Aiva, Google’s MusicLM

4. Programming & Software Development

AI can write and debug code, suggest functions, or generate full apps from prompts.

- Use Cases:

  - Autocomplete for developers

  - Code translation and refactoring

  - Building no-code tools from descriptions

- Example Tools: GitHub Copilot, Replit Ghostwriter

5. Industry-Specific Applications

- Healthcare:

  - Synthesizing patient data for simulations

  - AI-generated radiology reports

  - Drug discovery (e.g., generating molecular structures)

- Finance:

  - Report summarization

  - Risk modeling using synthetic data

  - Fraud scenario simulation

- Education:

  - Personalized learning content

  - Automatic test creation

  - Essay feedback tools

6. Simulation, Gaming & Virtual Worlds

- Dynamic storyline generation

- NPC dialogue scripting

- Procedural world-building

- Voice-driven character interaction

Business Value Snapshot:

| Application | Value Delivered |
|---|---|
| Marketing copy generation | Speed + personalization |
| AI customer support | 24/7 availability with natural conversation |
| AI image generation | Rapid visual content at low cost |
| Code assistants | Faster development cycles |
| Synthetic training data | Reduced privacy risk, improved model accuracy |

Generative AI is not just hype; it’s already embedded in business tools, creative workflows, and professional services. As capabilities expand, its use cases will become even more dynamic and tailored.

Benefits of Generative AI

Generative AI delivers value by boosting creativity, increasing efficiency, and enabling personalization at scale. It helps individuals and organizations produce high-quality content faster, often with fewer resources, and opens up new ways of solving problems.

Here are the most impactful advantages:

1. Faster Content Creation

- Automates writing, design, and coding tasks that would otherwise take hours or days

- Great for marketers, writers, developers, and educators

- Example: Generating a product description in seconds instead of manually writing one

2. Enhanced Creativity & Ideation

- Sparks new ideas or variations (e.g., multiple image styles or blog openings)

- Works well for brainstorming, prototyping, or exploring concepts

- Helps overcome creative blocks by offering AI-generated inspiration

3. Personalization at Scale

- Tailors messaging, visuals, or product recommendations based on user data

- Enables dynamic content for different audiences or segments

- Common in email marketing, online learning, and customer engagement

4. Increased Productivity

- Supports professionals with AI copilots or assistants

- Automates routine or repetitive tasks (e.g., summarizing meeting notes, rephrasing emails)

- Lets teams focus on higher-level thinking and decision-making

5. Cost Efficiency

- Reduces dependency on outsourced creative services or manual labor

- Enables startups and small teams to produce enterprise-level outputs

- Cuts down on revision cycles by offering multiple drafts instantly

6. Democratization of Skills

- Empowers non-experts to create in areas like design, writing, and coding

- Makes complex technologies more accessible to educators, students, and creators

- Lowers the barrier to entry across industries

7. Strategic Differentiation for Businesses

- Creates unique brand experiences (e.g., AI-powered virtual advisors)

- Drives innovation in customer interaction and product development

- Supports data-driven experimentation (e.g., testing thousands of ad variations)

Generative AI isn’t just about speed; it’s about scaling intelligence, creativity, and personalization in ways that weren’t possible before.

What Are the Risks and Ethical Concerns of Generative AI?

While generative AI brings innovation and efficiency, it also raises serious concerns around accuracy, misuse, ethics, and sustainability. The risks span from misinformation and copyright disputes to energy demands and new security threats. Understanding these issues is crucial for adopting AI responsibly.

1. Misinformation, Hallucination & Deepfakes

Generative AI systems can ‘hallucinate,’ producing outputs that sound factual but are not grounded in verified or up-to-date information. These errors can have real-world consequences. For example, Air Canada was sued after its chatbot shared incorrect refund information. Deepfake images and videos further blur truth boundaries, enabling disinformation or fraud.

Example: Fake videos of public figures or AI-generated news articles spreading false narratives.

To address this limitation, many organizations use retrieval-augmented generation (RAG), which allows models to reference trusted internal documents or external knowledge sources before generating responses.

2. Fake Citations & Academic Misuse

AI models can generate convincing but nonexistent references. Several law firms have been penalized for submitting briefs containing fabricated legal citations. A Stanford professor’s expert testimony was dismissed for including fake citations generated by ChatGPT, ironically, in a case about deepfakes.

Implication: Users must verify AI-generated research to maintain academic and professional integrity.

3. Bias, Discrimination & Fairness

AI models often absorb social and cultural biases from their training data. Outputs can unintentionally reinforce stereotypes or exclusionary patterns. For instance, AI-generated job ads or facial recognition systems may favor certain demographics.

Challenge: Ensuring fairness requires transparent datasets and continuous bias audits.

4. Copyright, IP & Terms of Service Violations

Copyright and data ownership laws have not yet caught up with generative AI. Many models are trained on copyrighted works without explicit permission, raising questions about fair use and creative ownership. In addition, some AI companies have been accused of violating publishers’ terms of service (TOS) during data scraping, e.g., disputes between OpenAI, Microsoft, and The New York Times.

Risk: Enterprises using these models could face legal exposure even when using “open” models.

5. Openwashing & Lack of Transparency

Some AI providers claim to offer “open” models but impose hidden restrictions. Licensing terms may include fees after certain usage thresholds or restrict commercial competition. Many “open” models also fail to disclose their training data, limiting transparency and making bias detection harder.

Takeaway: True openness requires full disclosure of data sources and licensing clarity.

6. Data Sweatshops & Human Exploitation

Behind AI’s automation lies human labor. Many datasets are annotated by low-wage workers in developing countries, sometimes exposed to graphic or distressing content without adequate protection. These “data sweatshops” raise ethical and mental health concerns.

Impact: The hidden human cost of training AI models must be acknowledged and regulated.

7. Environmental & Energy Impact

Training large AI models consumes significant energy and water resources. Mega data centers powering generative AI strain electrical grids and raise local utility rates. The carbon footprint of large-scale AI infrastructure has become a growing environmental concern.

Example: GPT-3’s training reportedly used more than 1,000 megawatt-hours of electricity, according to one widely cited estimate.

8. New Security Threats & Cyber Risks

Generative AI can inadvertently empower attackers. Models can help craft sophisticated phishing emails, fake voices, or deepfake videos mimicking trusted executives. On a technical level, AI systems can identify vulnerabilities faster than traditional methods, making defensive cybersecurity more complex.

Result: Enterprises must strengthen verification and authentication systems to counter AI-enabled threats.

9. Loss of Human Oversight & Over-Reliance

Overconfidence in AI outputs can lead to poor judgment or costly mistakes. Users might skip verification steps or defer too much decision-making to AI. In sensitive fields like law, healthcare, or finance, unchecked AI outputs can lead to real-world harm.

Solution: Keep human review at the center of all generative AI workflows.

10. Regulatory and Ethical Uncertainty

Governments worldwide are racing to establish AI governance standards. Regulations and frameworks such as the EU AI Act and the U.S. Blueprint for an AI Bill of Rights focus on transparency, explainability, and accountability. AI watermarking and labeling initiatives aim to make synthetic content traceable.

Challenge: Organizations must stay agile as laws evolve to ensure compliance and ethical alignment.

Generative AI must be developed and deployed responsibly with transparency, fairness, and human oversight. Its promise is immense, but so are its pitfalls. Addressing issues like misinformation, bias, copyright, data ethics, and sustainability will determine whether AI becomes a tool for progress or a source of disruption.

Where Is Generative AI Going?

Generative AI is evolving rapidly, from powering chatbots and image tools to shaping multimodal interfaces, responsible AI practices, and real-time personalization. As models get faster, more flexible, and better regulated, their role in everyday life will deepen.

“This is going to impact every product across every company.” – Sundar Pichai, Google

Here’s where the field is headed:

1. Multimodal AI: Beyond Just Text or Images

- Models are now trained to handle multiple data types at once: text, image, audio, and video.

- Multimodal systems allow users to upload an image and ask questions about it, or generate code from a voice command.

Emerging Examples:

- OpenAI’s GPT-4 with image input

- Google Gemini, which integrates voice, vision, and text

- AI tools that take a photo and suggest edits, captions, or responses

2. Memory-Enhanced and Agentic Systems

- Newer generative systems can remember past interactions, goals, or user preferences over time.

- This leads to more helpful AI “agents”: assistants that can reason and act, rather than tools that only respond to a single prompt.

Use Cases:

- Personal tutoring

- Executive support

- Task planning and automation

3. Smaller, More Efficient Models

- Instead of relying on massive centralized models, lightweight “edge” AI will run on local devices.

- Open-weight alternatives (e.g., Llama 3, Mistral) are gaining traction for private or offline use.

Impact:

- Faster response times

- Lower cost and energy use

- More secure data handling

4. Responsible AI: Ethics, Safety & Governance

- Governments and companies are developing policies around bias testing, transparency, and auditability.

- Tools for watermarking AI content, identifying hallucinations, and improving explainability are in active development.

Key Developments:

- EU AI Act (in force since August 2024, with obligations phasing in from 2025)

- Industry-wide pushes for AI labeling, consent mechanisms, and ethical training standards

5. Embedded AI in Everyday Tools

- Generative AI will increasingly be built into productivity suites, operating systems, and physical devices.

- “Invisible AI” will write emails, summarize docs, translate meetings, or generate visual assets without explicit prompts.

Examples:

- Microsoft Copilot in Office

- Adobe Firefly in Photoshop

- Generative tools in CRM, HR, and design platforms

What to Expect Next:

- Greater control: Users will guide tone, format, and creativity level.

- More collaboration: AI tools will “co-create” instead of just generating output.

- Real-time intelligence: AI will respond dynamically to user goals, not just static prompts.

Generative AI is shifting from a passive tool to an active collaborator capable of understanding context, retaining memory, and supporting goal-driven tasks.

Generative AI Is Redefining Creation and Intelligence

Generative AI is not a passing trend; it’s a foundational shift in how we produce, personalize, and interact with information. From language and images to code and music, this technology allows machines to generate content that feels remarkably human.

In this guide, we’ve covered:

- What generative AI is and how it differs from traditional AI

- The core models and technologies behind it (transformers, GANs, diffusion)

- Key applications across industries like marketing, healthcare, and software

- The advantages it offers in speed, creativity, and personalization

- The risks around misinformation, bias, IP, and oversight

- What’s coming next, from multimodal tools to agentic AI

Whether you’re a learner, business decision-maker, or developer, understanding how generative AI is operationalized is now essential. It will continue to shape how we work, create, and communicate, raising new opportunities and new responsibilities.

“The real challenge for organizations is no longer understanding what generative AI is, but deciding how to deploy it responsibly, securely, and at scale.”

FAQs: Common Questions About Generative AI

What is generative AI in simple terms?

Generative AI is a type of artificial intelligence that creates new content like text, images, or code by learning from existing data. It generates original outputs rather than just analyzing or categorizing inputs.

How is generative AI different from traditional AI?

Traditional AI focuses on predictions or classifications (e.g., spam detection), while generative AI produces new content (e.g., writing an email or generating an image) based on learned patterns.

What are examples of generative AI tools?

Popular tools include ChatGPT (text), DALL·E and Midjourney (images), GitHub Copilot (code), and ElevenLabs (voice). These tools turn user prompts into creative, usable outputs.

What types of content can generative AI create?

It can generate text (articles, emails), images (illustrations, designs), audio (music, speech), video, and even software code.

How does a transformer model work in generative AI?

Transformers use attention mechanisms to understand relationships between words or tokens in a sequence, enabling coherent text generation or translation.
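To make the idea of attention more concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration only: the three two-number "token" vectors are made up, and real transformers add learned projection matrices, multiple attention heads, and many stacked layers that are left out here.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score how strongly this token relates to every token in the sequence.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Blend the value vectors according to those weights.
        blended = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical embeddings for a three-token sequence (illustrative values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Each row of `weights` shows how much one token "attends" to every other token, and each row of `outputs` is the resulting context-aware blend of the inputs.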

What are GANs, and how do they generate images?

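GANs (Generative Adversarial Networks) pit two neural networks against each other: a generator that produces candidate images and a discriminator that tries to tell them apart from real ones. As each improves, the generator’s images become more realistic.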

Is generative AI safe to use?

It’s generally safe when used responsibly, but concerns include misinformation, bias, data privacy, unpredictable behavior in production, and over-reliance on unreviewed outputs. Human oversight, governance, and continuous monitoring are essential, especially at scale.

Who owns AI-generated content?

Ownership depends on local laws and the platform used. In many cases, AI-generated outputs may not be protected by copyright unless significantly modified by a human.

Can anyone use generative AI, or do you need technical skills?

Most generative AI tools are user-friendly and designed for non-technical users. However, deeper use (e.g., fine-tuning models) may require coding experience.

What’s the future of generative AI?

Generative AI is evolving toward multimodal interaction, smarter agents, and tighter ethical controls. It will become more personalized, explainable, and embedded in everyday tools.

Does generative AI actually understand what it’s saying?

Not in a human sense. It generates responses based on patterns and probabilities learned from data, not true understanding or intent.

Is generative AI just copying existing content?

Not usually. It generates new outputs from learned patterns rather than retrieving stored copies, though it can occasionally produce passages that closely resemble its training data, depending on the prompt and the data.

Can generative AI replace my job?

In most cases, it augments work rather than replacing it. Jobs built around repetitive tasks are more affected, while roles requiring judgment and accountability still rely on humans.

How accurate is generative AI?

It can be very useful but not always accurate. Outputs should be reviewed, especially for factual, legal, or medical content.

Can generative AI work with my company’s internal data?

Yes, when implemented with proper data integration techniques like retrieval-augmented generation (RAG).
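As a rough sketch of the retrieval half of RAG, the example below picks the internal document most similar to a question and places it into a prompt. Everything here is an assumption for illustration: the three policy sentences are invented, the word-count similarity stands in for the embedding search a production system would more likely use, and the final call to a language model is left out.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(question, documents, top_k=1):
    """Return up to top_k documents that share words with the question."""
    q_vec = Counter(tokenize(question))
    scored = [(cosine(q_vec, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]

def build_prompt(question, documents, top_k=1):
    """Assemble a prompt that grounds the model in the retrieved text."""
    context = retrieve(question, documents, top_k)
    lines = "\n".join(f"- {doc}" for doc in context)
    if not lines:
        lines = "- (no relevant documents found)"
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{lines}\n\nQuestion: {question}"
    )

# Hypothetical internal documents (invented for this example).
internal_docs = [
    "Expense reports must be submitted within 30 days of purchase.",
    "The VPN requires multi-factor authentication for all employees.",
    "Annual leave requests need manager approval two weeks in advance.",
]
prompt = build_prompt("When must expense reports be submitted?", internal_docs)
```

The resulting `prompt` would then be sent to a generative model, which answers from the supplied context rather than from memory alone.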

Why does generative AI sometimes “hallucinate”?

Because it predicts likely responses rather than verifying facts, especially when it lacks access to reliable data.
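The sketch below illustrates that point with a toy next-word sampler. The scores are invented for this example (a real model computes them with a neural network), and the `temperature` parameter is a common sampling control rather than a detail taken from this guide. Notice that nothing in the code checks whether the chosen word is true; it only follows the probabilities.

```python
import math
import random

def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw scores into probabilities; lower temperature sharpens them."""
    scaled = [score / temperature for score in logits.values()]
    highest = max(scaled)
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return {token: e / total for token, e in zip(logits, exps)}

def sample_next_token(logits, temperature=1.0, rng=None):
    """Pick the next token at random, weighted by its probability."""
    rng = rng or random.Random()
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]

# Made-up scores for completing "The capital of Australia is ...".
# The wrong answer scores highest because it is assumed to be more common
# in the (hypothetical) training text.
logits = {"Sydney": 2.0, "Canberra": 1.8, "Melbourne": 0.5}

probs = softmax_with_temperature(logits)
next_token = sample_next_token(logits, temperature=0.7, rng=random.Random(0))
```

With these assumed scores, the fluent but incorrect "Sydney" is the single most likely completion, which is the mechanism behind a hallucination: likelihood, not verification, drives the output.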

Does generative AI learn from my conversations?

It depends on the platform and settings. Enterprise deployments typically do not use customer inputs to train models, for privacy reasons.

This page was last edited on 23 December 2025, at 5:49 pm
