Cloud-native AI application development combines the agility of cloud-native architectures with the intelligence of modern AI systems, transforming how organizations design, deploy, and scale digital solutions. As AI adoption accelerates, the challenge is no longer whether to use AI, but how to implement it efficiently, securely, and in a way that remains adaptable to changing platforms and technologies.

This article provides a practical, vendor-neutral guide for professionals focused on real-world implementation. It outlines modern architectural approaches, actionable development steps, and proven practices for building AI applications cloud-natively. By the end, you will have a clear understanding of core principles, common pitfalls to avoid, and scalable blueprints to accelerate production-ready AI initiatives.

Summary Table: Key Takeaways from Cloud-Native AI Application Development

| Topic | Key Takeaway |
| --- | --- |
| What is Cloud-Native AI? | AI apps built with containers, microservices, and cloud-native automation for scalability. |
| Cloud-Native vs. AI-Native | Cloud-native focuses on scalable ops; AI-native centers on continuous AI learning. |
| Architecture Components | Layers include orchestration, microservices, serverless, gateways, and monitoring. |
| Leading Stacks/Tools | Kubernetes, MLflow, Kubeflow, Prometheus, and open API gateways are widely adopted. |
| Application Lifecycle | The pipeline spans data ingestion to monitoring, emphasizing CI/CD and MLOps integration. |
| Security & Responsible AI | Govern data, models, access, and ethics with container security, audits, and bias checks. |
| Monitoring & Cost | Implement robust observability and autoscaling for performance and budget control. |
| Integration with Legacy | Use APIs, data pipelines, and gateways to modernize without disrupting core systems. |
| Industry Use Cases | Broad adoption, from healthcare imaging to retail personalization and smart manufacturing. |
| Key Challenges & Solutions | Focus on scaling, observability, vendor neutrality, and legacy adaptation strategies. |
| Future Trends | Agent-based AI, serverless GPU, and LLMOps will shape the coming years. |

What Is Cloud-Native AI Application Development?

Cloud-native AI application development is the process of designing, building, and deploying AI applications using modern cloud-native principles—such as containerization, microservices architecture, and automated CI/CD pipelines—to maximize scalability, resilience, and agility across diverse environments.

Key characteristics of cloud-native AI app development:

- Containerization: Each AI service or model runs in its own container for isolation and portability.

- Microservices: Applications are split into small, independently deployable services.

- Orchestration: Tools like Kubernetes automate deployment, scaling, and management.

- CI/CD for AI: Automated build, test, and deployment pipelines speed up iteration.

- Observability: Continuous monitoring and tracing for performance and transparency.
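To make the containerization and microservice characteristics concrete, here is a minimal sketch of a stateless inference service written with only Python's standard library. It exposes a health endpoint for the orchestrator's probes and a prediction endpoint. The routes (/healthz, /predict), the JSON payload shape, and the toy linear "model" are hypothetical placeholders; a real service would load a trained model artifact and typically run behind a production-grade server inside its own container image.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical toy "model": a fixed linear scorer standing in for a real artifact.
WEIGHTS = [0.4, -0.2, 0.1]
BIAS = 0.05


def predict(features: list[float]) -> float:
    """Score one feature vector; stateless, so any replica can serve any request."""
    if len(features) != len(WEIGHTS):
        raise ValueError(f"expected {len(WEIGHTS)} features, got {len(features)}")
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


class InferenceHandler(BaseHTTPRequestHandler):
    def _send_json(self, status: int, body: dict) -> None:
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def do_GET(self) -> None:
        # Health endpoint for the orchestrator's liveness/readiness probes.
        if self.path == "/healthz":
            self._send_json(200, {"status": "ok"})
        else:
            self._send_json(404, {"error": "not found"})

    def do_POST(self) -> None:
        if self.path != "/predict":
            self._send_json(404, {"error": "not found"})
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            request = json.loads(self.rfile.read(length))
            score = predict(request["features"])
        except (ValueError, KeyError, TypeError) as exc:
            # Malformed JSON, a missing key, or a wrong shape is a client error.
            self._send_json(400, {"error": str(exc)})
            return
        self._send_json(200, {"score": score})

    def log_message(self, format, *args) -> None:
        pass  # silence the default stderr access log in this sketch


def make_server(port: int = 8080) -> ThreadingHTTPServer:
    # Bind to all interfaces so the container's port mapping can reach the service.
    return ThreadingHTTPServer(("0.0.0.0", port), InferenceHandler)
```

In a container image, the entrypoint could simply call make_server().serve_forever(). Because the handler keeps no per-request state, the orchestrator can add or remove replicas without coordination.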

How it differs from adjacent concepts:

While AI application development focuses on building and deploying AI solutions, cloud-native AI brings in methodologies from cloud-native software engineering—like microservices and orchestration—enabling those AI apps to scale flexibly across clouds, improve uptime, and respond quickly to business needs.

Cloud-Native vs. AI-Native Development: How Are They Different?

Cloud-native and AI-native development are related but distinct paradigms, each with unique strengths.

Understanding their differences is vital for making informed architecture and process decisions.

| Aspect | Cloud-Native Development | AI-Native Development |
| --- | --- | --- |
| Core Philosophy | Distributed, resilient, and scalable apps | Model-driven, data-centric pipelines |
| Primary Focus | Microservices, containers, orchestration | Continuous model training and integration |
| Pipeline/Process | CI/CD, DevOps, automated app ops | Continuous learning, model (re)deployment |
| Key Technologies | Kubernetes, Docker, Serverless/FaaS, DevOps tools | ML frameworks, MLOps stacks, feature stores |
| When to Use | General software/app workloads with scalability | AI/ML apps needing frequent retraining/tuning |

Example:

- Cloud-native approach: Building a recommendation engine where the AI model is deployed as a microservice inside Kubernetes.

- AI-native approach: Creating self-improving systems, like autonomous vehicles, where models require constant retraining on new or real-time data.

Choosing the right approach often means blending strategies—cloud-native methods for resilient deployment, AI-native for dynamic, continuous learning.

What Are the Core Components of Cloud-Native AI Architecture?

A robust cloud-native AI architecture consists of layered, modular components designed for flexibility, scalability, and fast iteration.

Core architecture components:

- Containers: Encapsulate application code, dependencies, and AI models for portability.

- Orchestration Platform: Kubernetes or OpenShift automate rolling updates, scaling, and failover for AI services.

- Microservices: Small, independently deployable units enable parallel development and easy scaling of AI features.

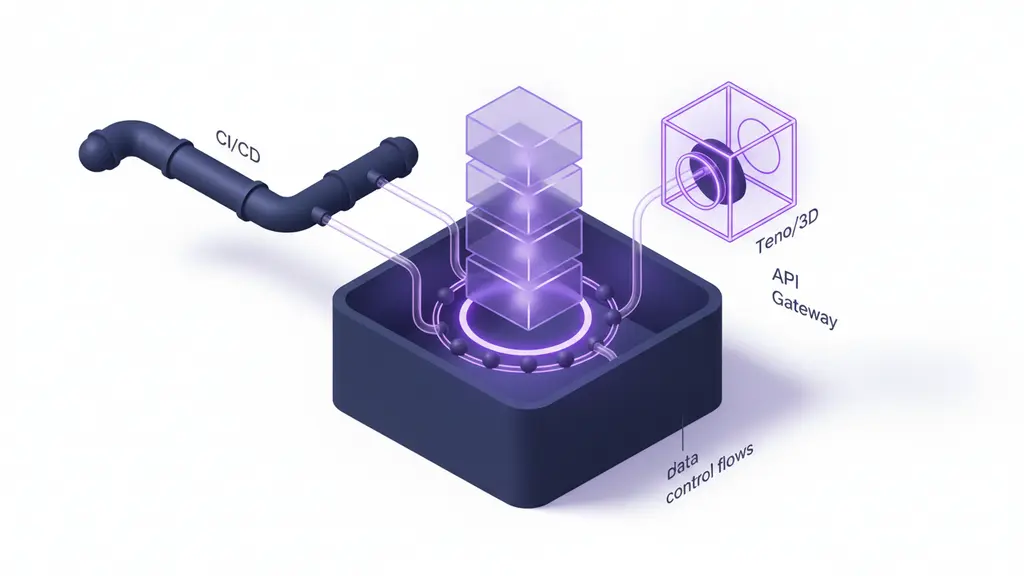

- CI/CD Pipelines: Automated processes for building, testing, and deploying code and ML models quickly and safely.

- Serverless/FaaS (Function as a Service): For event-driven AI workloads and elastic inference (e.g., image recognition on demand).

- AI Gateway or API Management Layer: Routes inference requests, handles throttling and load balancing, and can direct traffic to agent-based or LLM backends.

- Observability & Monitoring: Collects logs, traces, and metrics from both application and ML models.

- Agent-Based Patterns: Modern architectures that route tasks between multiple AI agents or LLMs (Large Language Models) for complex workflows.
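The gateway responsibilities listed above (routing, throttling, load balancing) can be illustrated with a small in-process sketch. It assumes a token-bucket rate limit per client and simple round-robin balancing across hypothetical backend URLs; real gateways such as Kong or NGINX provide these capabilities as configuration (plugins or directives) rather than application code.

```python
import itertools
import time
from typing import Callable


class RateLimited(Exception):
    """Raised when a client exceeds its request budget (maps to HTTP 429)."""


class TokenBucket:
    """Token-bucket throttle: refills `rate` tokens per second, holds at most `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens = capacity      # start full so a short burst is allowed
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class InferenceGateway:
    """Hypothetical in-process gateway: per-client throttling plus round-robin balancing."""

    def __init__(self, routes: dict[str, list[str]], rate: float, burst: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        # routes maps a model name (e.g. "llm-chat") to hypothetical backend URLs.
        self._cycles = {model: itertools.cycle(urls) for model, urls in routes.items()}
        self._buckets: dict[str, TokenBucket] = {}
        self._rate, self._burst, self._clock = rate, burst, clock

    def route(self, client_id: str, model: str) -> str:
        """Return the backend URL for this request, or raise if it must be rejected."""
        if model not in self._cycles:
            raise KeyError(f"no backend registered for model {model!r}")
        bucket = self._buckets.get(client_id)
        if bucket is None:
            bucket = self._buckets[client_id] = TokenBucket(self._rate, self._burst, self._clock)
        if not bucket.allow():
            raise RateLimited(f"client {client_id!r} exceeded its rate limit")
        return next(self._cycles[model])
```

With burst=2 and rate=1.0, a client can send two requests immediately and then roughly one per second, while successive allowed requests alternate across the registered backends.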

Conceptual architecture stack (top to bottom):

- Presentation/API Layer (External endpoints, APIs, agent routing)

- Application/Microservices Layer (Stateless AI/ML services, business logic)

- Orchestration Layer (Kubernetes/OpenShift)

- Data Layer (Feature stores, model repositories, datasets)

- Infrastructure Layer (Cloud compute, storage, networking)

Which Technology Stacks and Tools Enable Cloud-Native AI?

Selecting the right vendor-neutral technology stack is critical for effective cloud-native AI application development.

Leading enterprises typically combine open-source tools with managed services to adopt AI at scale without giving up flexibility.

| Function | Leading Tools & Frameworks | Notable Strengths |
| --- | --- | --- |
| Orchestration | Kubernetes, OpenShift | Portable, resilient, large ecosystem |
| Containerization | Docker, Podman | Widely supported, standard for images |
| Model Ops (MLOps) | MLflow, Kubeflow, KServe | Reproducible ML lifecycle, model serving |
| CI/CD Pipelines | Jenkins, Tekton, ArgoCD | Automate build/test/deploy for AI |
| Data/Feature Stores | Feast, Delta Lake | Manage features and ML data at scale |
| Observability | Prometheus, Grafana, OpenTelemetry | Metrics, logging, tracing |
| Serverless/FaaS | Knative, OpenFaaS | Event-driven AI, elastic scaling |
| API/ML Gateway | Kong, NGINX, AI Gateway (OSS) | Secure and manage LLM and ML traffic |

For different use cases:

- NLP or LLM-Driven Apps: Hugging Face Transformers + KServe + Kubernetes

- Computer Vision: TensorFlow Serving or TorchServe + GPU-enabled Kubernetes nodes

- Startups: Favor open-source CI/CD (ArgoCD) and model management (MLflow)

- Enterprises: May integrate with enterprise orchestrators (OpenShift), advanced monitoring, and compliance tools

Vendor-agnostic stacks ensure flexibility and portability, reducing long-term risk of lock-in.

What Does the Cloud-Native AI Application Lifecycle Look Like?

The cloud-native AI application lifecycle consists of distinct, repeatable stages, providing a clear roadmap from idea to ongoing operations.

Typical lifecycle stages:

- Data Collection & Preparation: Gather, clean, and structure data, often ingesting it from cloud or legacy systems.

- Model Development: Train, validate, and select the best-performing AI/ML models using scalable compute resources.

- Model Packaging & Validation: Serialize models (e.g., using ONNX or pickle) and test them in isolated, containerized environments.

- CI/CD Pipeline: Automate testing and deployment of both code and models via modern build pipelines.

- Deployment & Orchestration: Roll out containerized AI services using orchestrators like Kubernetes, with blue/green or canary deployments.

- Scaling & Autoscaling: Dynamically adjust resources to match demand, which is critical for fluctuating inference loads.

- Monitoring & Observability: Continuously track model health, performance, drift, and system resource usage.

- Feedback & Continuous Improvement: Capture real-world data and feedback to trigger model retraining (the loop that drives MLOps).
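For the Scaling & Autoscaling stage, the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default). The Python sketch below reproduces only that rule plus min/max clamping, using a per-pod average metric such as GPU utilization. The real controller also applies stabilization windows and handles missing metrics and unready pods, which are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA-style scaling rule (no stabilization window or readiness handling).

    current_metric is the average per-pod value (e.g. GPU utilization in percent);
    target_metric is the value the autoscaler tries to hold.
    """
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("current_replicas and target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas      # close enough to target: avoid flapping
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, four replicas averaging 90% GPU utilization against a 60% target give a ratio of 1.5, so desired_replicas(4, 90, 60) returns 6.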

Checklists for each phase:

- Data readiness: Data schemas standardized, lineage tracked.

- Model handoff: Models saved in versioned registry; API contract defined.

- Deployment: Automated through pipelines with strong rollback and logging.

- Monitoring: Alerts set for latency, errors, drift.

- Continuous ops: Routine audit for model bias, explainability, and compliance.

A visual workflow diagram can clarify this end-to-end lifecycle for your team.
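The "Model handoff" and "Deployment" checklist items are often enforced by a quality-gate step in the CI/CD pipeline that blocks promotion when a candidate model regresses. This is a minimal sketch under stated assumptions: the training job writes metrics to JSON files with hypothetical accuracy and p95_latency_ms keys, and the thresholds are illustrative rather than recommended values.

```python
import json
import sys

# Hypothetical gate thresholds; tune them per project.
MAX_ACCURACY_DROP = 0.01    # candidate may be at most 1 percentage point worse
MAX_P95_LATENCY_MS = 200.0  # latency budget for the serving path


def evaluate_gate(baseline: dict, candidate: dict) -> list[str]:
    """Return human-readable failures; an empty list means the candidate may ship."""
    failures = []
    if candidate["accuracy"] < baseline["accuracy"] - MAX_ACCURACY_DROP:
        failures.append(
            f"accuracy {candidate['accuracy']:.3f} regressed vs baseline {baseline['accuracy']:.3f}"
        )
    if candidate["p95_latency_ms"] > MAX_P95_LATENCY_MS:
        failures.append(f"p95 latency {candidate['p95_latency_ms']:.0f} ms exceeds budget")
    return failures


def main(baseline_path: str, candidate_path: str) -> int:
    with open(baseline_path) as f:
        baseline = json.load(f)
    with open(candidate_path) as f:
        candidate = json.load(f)
    failures = evaluate_gate(baseline, candidate)
    for msg in failures:
        print(f"GATE FAILED: {msg}", file=sys.stderr)
    # A non-zero exit code makes most CI systems stop the pipeline at this step.
    return 1 if failures else 0
```

A pipeline step could run sys.exit(main("baseline.json", "candidate.json")) (file names hypothetical); a failed gate leaves the current production model in place.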

How Do You Secure, Govern, and Ensure Responsible AI in Cloud-Native Environments?

Securing and governing cloud-native AI applications is critical for protecting intellectual property, sensitive data, and ensuring ethical AI outcomes.

Best practices in security, governance, and responsible AI:

- Container Security:

– Use image scanning to detect vulnerabilities before deployment.

– Enforce Role-Based Access Control (RBAC) throughout the stack.

- Pipeline and Model Governance:

– Track data and model lineage for auditability.

– Maintain audit trails of every model change.

– Comply with applicable regulations (such as HIPAA for healthcare data and GDPR for personal data).

- API and Data Security:

– Secure API keys and endpoints.

– Encrypt sensitive data in transit and at rest.

- Responsible & Ethical AI:

– Test models for bias using open-source tools.

– Implement model explainability and transparency dashboards.

– Check for hallucinations (false outputs), especially in LLM-driven apps.

- Open Source and Automated Tools:

– Use tools like Trivy (container security), Fairlearn (bias), and MLflow Model Registry (traceability).
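As an illustration of the "test models for bias" item, the sketch below computes demographic parity difference by hand: the gap between the highest and lowest rate of positive predictions across sensitive groups. Fairlearn provides a metric for the same idea; this stdlib version only makes the arithmetic visible, and the group labels and predictions used with it are hypothetical.

```python
from collections import defaultdict


def selection_rates(y_pred: list[int], groups: list[str]) -> dict[str, float]:
    """Fraction of positive predictions (1s) within each sensitive group."""
    if len(y_pred) != len(groups):
        raise ValueError("y_pred and groups must have the same length")
    positives: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(y_pred: list[int], groups: list[str]) -> float:
    """Largest minus smallest group selection rate; 0.0 means equal rates."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())
```

With predictions [1, 1, 0, 1] for group "a" and [0, 0, 0, 1] for group "b", the selection rates are 0.75 and 0.25, so the difference is 0.5. Which gap is acceptable is a policy decision for your governance process, not a universal constant.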

Security/Governance checklist:

- All containers scanned and patched for known vulnerabilities

- Access restricted and auditable

- Compliance requirements (HIPAA, GDPR, industry-specific) mapped

- Models validated for bias and explainability

- Comprehensive logging and model lineage tracking active
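For the audit-trail and lineage items, one common technique (chosen here for illustration; the article does not prescribe it) is a hash-chained, append-only log in which each entry stores the hash of the previous one, so editing any past record invalidates every later hash. The ModelAuditLog class and its fields are hypothetical; in production the entries would be persisted to write-once storage or complemented by a model registry's own version history.

```python
import hashlib
import json
import time


def _digest(record: dict) -> str:
    # Hash a canonical JSON encoding so key order cannot change the result.
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode("utf-8")).hexdigest()


class ModelAuditLog:
    """Hypothetical append-only, hash-chained log of model lifecycle events."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, model: str, version: str, action: str, actor: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {
            "model": model,
            "version": version,
            "action": action,        # e.g. "registered", "promoted", "rolled_back"
            "actor": actor,
            "timestamp": time.time(),
            "prev_hash": prev_hash,  # links this entry to the one before it
        }
        record["hash"] = _digest(record)
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash; any edited, removed, or reordered entry fails."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True
```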

What Are Best Practices for Monitoring, Observability, and Cost Management?

Modern cloud-native AI applications demand comprehensive observability and diligent cost management to ensure performance, compliance, and business value.

Key best practices:

- Monitoring Stack:

– Metrics collection (Prometheus) for system health, logging (ELK or EFK stack), and distributed tracing (OpenTelemetry) to spot bottlenecks.

- AI Observability:

– Track not just system metrics but also model performance: accuracy, latency, and drift.

– Monitor token/API usage and hardware costs (especially GPUs for inference).

- Cost Management:

– Use autoscaling and serverless to right-size resources according to real-time demand.

– Track resource spend across projects and teams, and set automated budget alerts.

- Common Pitfalls:

– Neglecting drift monitoring or checking for drift too infrequently.

– Over-provisioning for “worst-case” loads, leading to cost overruns.

– Lack of transparency in multi-cloud or hybrid environments.
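Drift monitoring needs a concrete statistic. One widely used choice, swapped in here for illustration, is the Population Stability Index (PSI): bin a feature using the reference (training) distribution, then sum (a_i - e_i) * ln(a_i / e_i) over bins, where e_i and a_i are the reference and live proportions. The sketch assumes equal-width bins over the reference range and a small epsilon for empty bins.

```python
import math


def population_stability_index(expected: list[float], actual: list[float],
                               bins: int = 10, eps: float = 1e-6) -> float:
    """PSI between a reference sample (e.g. training data) and a live sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0   # guard against a constant reference feature

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Bin edges come from the reference data; clamp out-of-range live values.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Identical samples score exactly 0. A frequently cited rule of thumb reads PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as significant drift; treat these as starting points to calibrate per feature.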

Checklist for observability:

- All AI/model metrics piped into central dashboard

- Automated alerts for drift, anomalies, and resource spikes

- Distributed tracing implemented for root-cause analysis

- Cost/utilization dashboards visible to stakeholders

- Regular reviews to tune autoscaling and limit waste
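Cost dashboards and budget alerts boil down to aggregating usage by owner and comparing it to a budget. The sketch below assumes a hypothetical UsageRecord shape and per-unit prices; in practice these figures come from your cloud provider's billing export or a cost-allocation tool, and alerts would be sent to a notification channel rather than returned as a dict.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    team: str
    resource: str       # e.g. "gpu-hours" or "llm-tokens"
    quantity: float
    unit_cost: float    # hypothetical price per unit; use real billing data


def budget_alerts(records: list[UsageRecord], budgets: dict[str, float],
                  warn_ratio: float = 0.8) -> dict[str, str]:
    """Aggregate spend per team and flag teams nearing or exceeding their budget."""
    spend: dict[str, float] = defaultdict(float)
    for r in records:
        spend[r.team] += r.quantity * r.unit_cost
    alerts = {}
    for team, total in spend.items():
        budget = budgets.get(team)
        if budget is None:
            alerts[team] = f"no budget defined (spend {total:.2f})"
        elif total >= budget:
            alerts[team] = f"OVER budget: {total:.2f} / {budget:.2f}"
        elif total >= warn_ratio * budget:
            alerts[team] = f"warning: {total / budget:.0%} of budget used"
    return alerts
```

Teams with no recorded spend produce no alert, while spend without a defined budget is flagged so that unowned costs surface on the dashboard.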

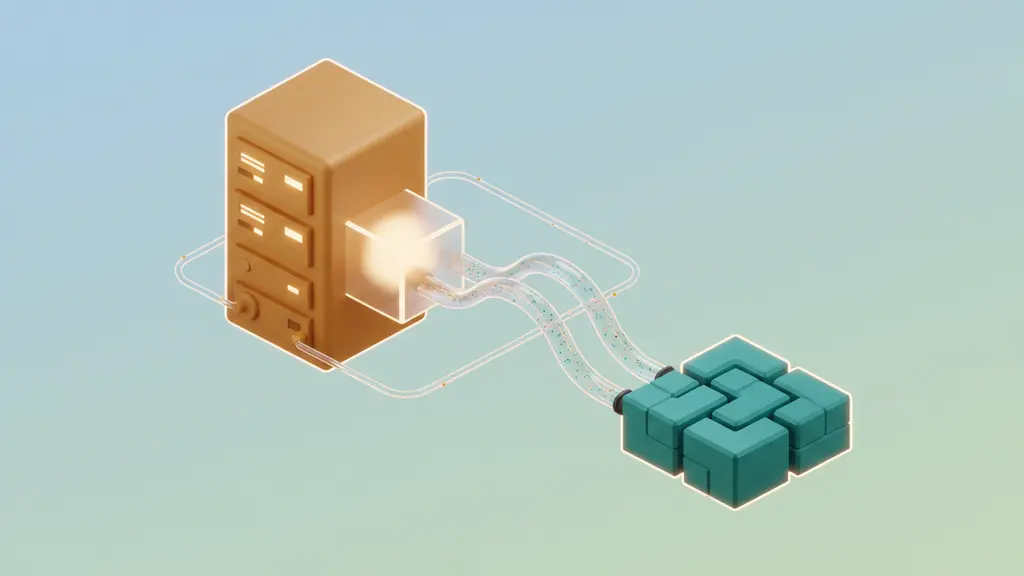

How to Integrate Cloud-Native AI with Legacy Systems

Integrating cloud-native AI with legacy systems enables organizations to deploy advanced intelligence without a total infrastructure rewrite.

Common integration patterns include:

- API Wrappers: Wrap legacy functions or services behind modern APIs so new services can call them.

- Data Pipelines: Extract, transform, and load (ETL) from legacy databases for AI model consumption.

- Gateway and Protocol Adaptation: Employ an API Gateway to bridge old protocols (SOAP, RPC) to modern REST/GraphQL or event-driven APIs.

- Hybrid Cloud Deployments: Mix on-premise legacy with public cloud AI services for gradual migration.
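The protocol-adaptation pattern can be as small as a function that converts a legacy SOAP/XML payload into JSON for a modern AI service. The response shape (Item, SKU, Qty, Warehouse elements) is hypothetical; only the SOAP 1.1 envelope namespace is standard. The Python documentation warns that xml.etree.ElementTree is not secure against maliciously constructed data, so untrusted input calls for a hardened parser such as defusedxml.

```python
import json
import xml.etree.ElementTree as ET

# Standard SOAP 1.1 envelope namespace; the payload elements below are hypothetical.
SOAP_NS = {"soap": "http://schemas.xmlsoap.org/soap/envelope/"}


def inventory_xml_to_json(xml_text: str) -> str:
    """Translate a legacy SOAP inventory payload into the JSON an AI service expects."""
    root = ET.fromstring(xml_text)
    body = root.find("soap:Body", SOAP_NS)
    if body is None:
        raise ValueError("not a SOAP envelope")
    items = []
    for item in body.iter("Item"):
        items.append({
            "sku": item.findtext("SKU"),
            # Legacy systems often send numbers as strings; normalize types here.
            "quantity": int(item.findtext("Qty", default="0")),
            "warehouse": item.findtext("Warehouse"),
        })
    return json.dumps({"items": items})
```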

Step-by-step integration checklist:

- Assess: Catalog legacy systems, data formats, and integration points.

- Design: Determine appropriate adapter pattern (API, data, protocol).

- Isolate: Decouple AI-specific functions from monolithic apps if possible.

- Build: Develop containerized adapters or wrappers.

- Test: Ensure end-to-end data flows and responses.

- Migrate Gradually: Use blue/green or canary approaches to reduce disruption.

- Document: Keep integration interfaces and dependencies thoroughly documented.
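For the "Migrate Gradually" step, a common canary technique is to hash a stable request key (such as a user ID) into buckets so that a fixed percentage of callers consistently reaches the new AI-backed service. The service names are hypothetical, and in a real deployment this split is usually configured in the API gateway or service mesh rather than in application code.

```python
import hashlib


def routes_to_canary(request_key: str, canary_percent: float) -> bool:
    """Deterministically send a stable slice of traffic to the new (canary) service.

    Hashing a stable key keeps each caller on the same version across requests,
    which makes it easier to compare old and new behavior.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000   # uniform bucket in 0..9999
    return bucket < canary_percent * 100


def choose_backend(request_key: str, canary_percent: float) -> str:
    # Hypothetical service names: the legacy path remains the default.
    if routes_to_canary(request_key, canary_percent):
        return "ai-recommender-v2"
    return "legacy-recommender"
```

Raising canary_percent in stages (for example 1, then 10, then 100) moves traffic gradually; because assignment is deterministic, callers already on the new path stay there as the percentage grows.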

Case example:

A retailer evolves its ERP system by wrapping legacy inventory APIs, feeding real-time data into a Kubernetes-based recommendation engine. This allows rapid AI innovation without destabilizing vital core systems.

What Are the Leading Industry Use Cases and Success Stories?

Cloud-native AI is fueling transformation across healthcare, finance, retail, manufacturing, and more.

Sample case studies:

- Healthcare: A hospital network accelerates disease detection by deploying a containerized AI model for imaging analysis, enabling seamless scaling during outbreaks.

- Financial Services: A fintech company builds a fraud detection microservice with Kubeflow and Kubernetes, reducing time-to-deployment for new detection models by 50%.

- Retail: An e-commerce giant employs agent-based AI for personalizing recommendations at scale, using an API gateway to manage traffic between legacy catalogs and modern AI services.

- Manufacturing: A smart factory adopts AI-powered defect detection, scaling image analysis workloads elastically via serverless GPU infrastructure.

These real-world examples underscore the flexibility and ROI of combining cloud-native design with cutting-edge AI.

What Common Challenges and Solutions Exist in Cloud-Native AI Development?

Building AI applications cloud-natively presents specific hurdles—but also proven strategies for overcoming them.

| Challenge | Problem | Solution |
| --- | --- | --- |
| Scaling inference workloads | Spiking demand leads to latency/downtime | Use autoscaling, serverless/FaaS, GPU pools |
| Security & compliance exposures | Sensitive data/model theft risk | RBAC, encryption, image scanning, compliance |
| Vendor lock-in | Over-dependence on specific cloud or tool | Favor open standards, modular stack choices |
| Legacy integration | Data silos, protocol mismatch, integration delays | API gateways, protocol adapters, phased migration |
| Observability gaps | Hard to debug/optimize hybrid systems | Centralized monitoring, distributed tracing |
| Managing model drift | AI decisions degrade over time | Routine drift detection, retraining pipelines |

Proactively addressing these with best practices, modular architectures, and open-source tools accelerates success.

What Trends Will Shape the Future of Cloud-Native AI?

Rapid shifts in both AI and cloud-native computing are shaping the next generation of intelligent applications.

Key emerging trends:

- Agent-Based and LLM-Native Architectures: Increased adoption of AI “agents” and large language models orchestrated natively in the cloud.

- LLMOps: MLOps practices tailored to the unique needs of large language models, such as tracking context, prompts, and performance.

- Serverless GPU: Elastic GPU capacity offered as a service, enabling event-driven inference without overprovisioning.

- Model Context Protocol (MCP): An open protocol for connecting AI models and agents to external tools, data sources, and context.

- Full-Stack Observability: Next-generation observability covering both system and model performance, cost tracing, and root-cause analysis.

- Edge-to-Cloud Learning: AI systems that adapt in real time by pushing training and inference closer to where data is generated.

Organizations that embrace these trends position themselves for greater AI agility and innovation.

FAQ: Cloud-Native AI Application Development

What is cloud-native AI application development?

Cloud-native AI application development is the process of building, deploying, and managing AI solutions using cloud-native approaches like containers, microservices, orchestration, and CI/CD, to achieve scalable and resilient AI systems.

How does cloud-native differ from AI-native development?

Cloud-native development emphasizes scalable infrastructure and resilient deployment using cloud technologies, while AI-native focuses specifically on iterative, model-centric workflows for continuous learning and AI innovation.

What are the key components of a cloud-native AI architecture?

The core components of cloud-native AI applications include containerization, microservices, Kubernetes-based orchestration, CI/CD pipelines for both application code and machine learning models, serverless functions, API gateways, and centralized observability and monitoring systems.

Which tools and frameworks are popular for cloud-native AI?

Popular, vendor-neutral tools for building cloud-native AI applications include Kubernetes for orchestration, Docker for containerization, MLflow and Kubeflow for model lifecycle management, Prometheus and Grafana for monitoring, and ArgoCD or Tekton for CI/CD automation.

How do you deploy machine learning models using containers and Kubernetes?

To deploy a model, package the trained model and its serving code into a container image, push the image to a registry, describe the desired state in a Kubernetes Deployment manifest, and roll it out using kubectl or an automated CI/CD pipeline for scalable and reliable inference.
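As a sketch of the manifest step, the Python function below builds a minimal apps/v1 Deployment for a containerized model server and can emit it as JSON, which kubectl apply accepts as well as YAML. The image name, port, resource sizes, and /healthz readiness path are assumptions for illustration.

```python
import json


def model_deployment(name: str, image: str, replicas: int = 2, port: int = 8080) -> dict:
    """Build a minimal Kubernetes apps/v1 Deployment for a containerized model server."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Illustrative sizes; derive real values from load tests.
                        "resources": {
                            "requests": {"cpu": "500m", "memory": "1Gi"},
                            "limits": {"memory": "2Gi"},
                        },
                        # Only send traffic once the model server reports ready.
                        "readinessProbe": {
                            "httpGet": {"path": "/healthz", "port": port},
                            "initialDelaySeconds": 5,
                            "periodSeconds": 10,
                        },
                    }],
                },
            },
        },
    }


def to_manifest_json(deployment: dict) -> str:
    return json.dumps(deployment, indent=2)
```

For example, writing to_manifest_json(model_deployment("fraud-model", "registry.example.com/fraud-model:1.4.0")) to model-deployment.json and running kubectl apply -f model-deployment.json would create or update the Deployment (names hypothetical); a CI/CD pipeline would perform the same apply step automatically.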

What are best practices for monitoring and observability in cloud-native AI?

Effective monitoring for cloud-native AI applications involves tracking infrastructure metrics, model performance, anomalies, resource utilization, and cost. Centralized dashboards and automated alerts enable proactive detection and continuous optimization.

How can legacy systems be integrated with cloud-native AI applications?

Legacy systems can be integrated into cloud-native AI applications by exposing existing functionality through APIs, using data pipelines for ETL, applying API gateways to manage traffic, and deploying containerized adapters to support gradual modernization.

What security and compliance considerations are unique to cloud-native AI?

Security for cloud-native AI applications includes protecting models from theft, securing ML pipelines, enforcing access controls, maintaining audit trails, managing data lineage, meeting regulatory requirements, and addressing AI bias and explainability.

What are common challenges in scaling cloud-native AI applications?

Common challenges when scaling cloud-native AI applications include managing inference costs, ensuring end-to-end observability, avoiding vendor lock-in, and integrating legacy systems. These are addressed through autoscaling, open-source tooling, and phased migration strategies.

Which industries are leading adopters of cloud-native AI applications?

Industries such as healthcare, financial services, retail, and manufacturing are leading adopters of cloud-native AI applications, using them for diagnostics, fraud detection, personalization, demand forecasting, and predictive maintenance.

Conclusion: Getting Started with Cloud-Native AI—Next Steps

Cloud-native AI application development is reshaping how businesses deliver intelligent, scalable, and future-ready solutions.

By adopting vendor-neutral architectures, automating your development lifecycle, and focusing on best practices in security, monitoring, and legacy integration, your teams can unlock powerful AI capabilities while maintaining flexibility and control.

Ready to accelerate your cloud-native AI journey?

Download our step-by-step blueprint, explore leading open-source tools, or contact our team for architecture reviews and custom guidance. Start building AI systems that are not just smart—but scalable, secure, and adaptable for tomorrow’s innovations.

Key Takeaways

- Cloud-native AI applies containers, microservices, and automation for scalable AI deployment.

- Open, modular toolchains (e.g., Kubernetes, MLflow) boost portability and prevent lock-in.

- Responsible AI demands proactive security, governance, and bias management throughout the lifecycle.

- Industry success stories—from healthcare to retail—show broad, measurable ROI of this approach.

- Start with small, modular integrations and grow capabilities over time to minimize risk and maximize impact.

This page was last edited on 16 February 2026, at 12:56 pm

Contact Us Now

Start a conversation with our team to solve complex challenges and move forward with confidence.